2024-07-12


- My knowledge is limited, so errors and omissions are inevitable. Criticism and corrections are welcome.

- For more content, see the Python Daily Operations column, the OpenCV-Python Small Applications column, the YOLO Series column, the Natural Language Processing column, or my homepage.

- Face disguise detection based on DETR

- YOLOv7 trains its own data set (mask detection)

- YOLOv8 trains its own dataset (soccer ball detection)

- YOLOv5: TensorRT accelerates YOLOv5 model inference

- YOLOv5: IoU, GIoU, DIoU, CIoU, EIoU

- Playing with Jetson Nano (V): TensorRT accelerates YOLOv5 target detection

- YOLOv5: Add SE, CBAM, CoordAtt, ECA attention mechanism

- YOLOv5: Interpretation of yolov5s.yaml configuration file, adding small target detection layer

- Python converts COCO format instance segmentation dataset to YOLO format instance segmentation dataset

- YOLOv5: Use version 7.0 to train your own instance segmentation model (for instance segmentation of vehicles, pedestrians, road signs, lane lines, etc.)

- Use Kaggle GPU resources to experience the Stable Diffusion open source project for free

- YOLOv10 was built by researchers from Tsinghua University based on the Ultralytics Python package and introduces a new approach to real-time object detection that addresses deficiencies in post-processing and model architecture in previous YOLO versions. By eliminating non-maximum suppression (NMS) and optimizing various model components, YOLOv10 achieves state-of-the-art performance while significantly reducing computational overhead. Extensive experiments demonstrate its superior accuracy and latency trade-offs at multiple model scales.

- [1] YOLOv10 source code: https://github.com/THU-MIG/yolov10.git

- [2] YOLOv10 paper: https://arxiv.org/abs/2405.14458

- Overview

The goal of real-time object detection is to accurately predict the category and location of objects in an image with low latency. The YOLO family has been at the forefront of this research because of its balance between performance and efficiency. However, its reliance on NMS for post-processing and inefficiencies in the architecture hold back both speed and accuracy. YOLOv10 addresses these issues by introducing consistent dual assignments for NMS-free training and a holistic, efficiency-accuracy driven model design strategy.

- Architecture

The architecture of YOLOv10 builds on the strengths of previous YOLO models while introducing several key innovations. The model architecture consists of the following components:

- Backbone: The backbone in YOLOv10 is responsible for feature extraction and uses an enhanced version of CSPNet (Cross Stage Partial Network) to improve gradient flow and reduce computational redundancy.

- Neck: The neck is designed to aggregate features of different scales and pass them to the head. It includes PAN (Path Aggregation Network) layers for effective multi-scale feature fusion.

- One-to-Many Head: Generates multiple predictions for each object during training, providing rich supervision signals and improving learning accuracy.

- One-to-One Head: Generates a single best prediction for each object during inference to eliminate the need for NMS, thereby reducing latency and improving efficiency.
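To make the difference between the two heads more concrete, here is a minimal pure-Python sketch of what post-processing looks like with and without NMS. It is only an illustration, not the code from the YOLOv10 repository: the function names (`iou`, `nms`, `select_top`), the thresholds, and the toy boxes are all my own assumptions. The idea is that a one-to-many style output contains several overlapping boxes per object and needs greedy NMS, while a one-to-one style output already has a single confident box per object, so a confidence filter plus top-k selection is enough.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(dets, iou_thr=0.5):
    """Classic greedy NMS over (box, score) pairs: pairwise IoU checks."""
    keep = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= iou_thr for kept_box, _ in keep):
            keep.append((box, score))
    return keep


def select_top(dets, conf_thr=0.25, max_det=300):
    """NMS-free post-processing: confidence filter, then top-k by score."""
    kept = [d for d in dets if d[1] >= conf_thr]
    kept.sort(key=lambda d: d[1], reverse=True)
    return kept[:max_det]


# Toy one-to-many style output: two objects, each with a duplicate box.
one_to_many_out = [
    ((10, 10, 50, 50), 0.90), ((12, 11, 51, 52), 0.80),
    ((100, 100, 150, 150), 0.85), ((98, 102, 149, 151), 0.70),
]
# Toy one-to-one style output: duplicates receive very low scores.
one_to_one_out = [
    ((10, 10, 50, 50), 0.90), ((12, 11, 51, 52), 0.05),
    ((100, 100, 150, 150), 0.85), ((98, 102, 149, 151), 0.03),
]

with_nms = nms(one_to_many_out)
without_nms = select_top(one_to_one_out)
```

In this toy case both paths keep the same two boxes, but `select_top` never computes a pairwise IoU, which is where the latency saving comes from.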

- Key Features

- NMS-Free Training: Utilize consistent dual assignment to eliminate the need for NMS and reduce inference latency.

- Holistic Model Design: Comprehensively optimize the model components for both efficiency and accuracy, including a lightweight classification head, spatial-channel decoupled downsampling, and rank-guided block design.

- Enhanced Model Capabilities: Combine large-kernel convolutions and partial self-attention modules to improve performance without significant computational cost.

- Model Variants: YOLOv10 has multiple models to meet different application needs:

- YOLOv10-N: Nano version for extremely resource-constrained environments.

- YOLOv10-S: Small version that balances speed and accuracy.

- YOLOv10-M: Medium version for general-purpose use.

- YOLOv10-B: Balanced version with increased width for higher accuracy.

- YOLOv10-L: Large version for higher accuracy at the cost of increased computational resources.

- YOLOv10-X: Extra-large version for maximum accuracy and performance.
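The "consistent dual assignment" listed under Key Features can be sketched in a few lines. As I understand the paper, both heads rank candidate predictions for a ground-truth box with the same kind of matching metric, roughly `score^alpha * IoU^beta`; the one-to-many branch keeps the top-k candidates and the one-to-one branch keeps only the best one. The sketch below is my own simplification: the function names, the default `alpha=0.5` and `beta=6.0`, and the toy numbers are assumptions, and the spatial prior (whether the anchor point lies inside the object) is assumed to have been applied already.

```python
def match_metric(cls_score, iou_value, alpha=0.5, beta=6.0):
    """Matching metric shared by both heads (alpha/beta values are assumptions)."""
    return (cls_score ** alpha) * (iou_value ** beta)


def dual_assign(cls_scores, ious, topk=10, alpha=0.5, beta=6.0):
    """Return (one_to_many_indices, one_to_one_indices) for one ground-truth box."""
    metrics = [match_metric(s, u, alpha, beta) for s, u in zip(cls_scores, ious)]
    # Rank candidate predictions from best to worst match.
    order = sorted(range(len(metrics)), key=lambda i: metrics[i], reverse=True)
    one_to_many = order[:topk]  # rich supervision during training
    one_to_one = order[:1]      # single positive sample, no duplicates
    return one_to_many, one_to_one


# Four candidate predictions for one object (toy values).
scores = [0.9, 0.6, 0.8, 0.3]
overlaps = [0.5, 0.9, 0.85, 0.95]
o2m, o2o = dual_assign(scores, overlaps, topk=3)
```

Because both branches use the same metric here, the sample chosen by the one-to-one branch is also the top-ranked sample of the one-to-many branch, which is the "consistency" that lets the two heads be optimized in the same direction.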

- Familiarity with Python

torch==2.0.1

torchvision==0.15.2

onnx==1.14.0

onnxruntime==1.15.1

pycocotools==2.0.7

PyYAML==6.0.1

scipy==1.13.0

onnxsim==0.4.36

onnxruntime-gpu==1.18.0

gradio==4.31.5

opencv-python==4.9.0.80

psutil==5.9.8

py-cpuinfo==9.0.0

huggingface-hub==0.23.2

safetensors==0.4.3
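If you want to confirm that your environment matches the pinned versions above, a small standard-library script can do it. This is only a sketch: `parse_pins` and `check_pins` are hypothetical helper names of mine, and only a few of the pins are repeated here to keep the example short.

```python
from importlib import metadata

# A few of the pins listed above; extend the string with the rest as needed.
PINS_TEXT = """
torch==2.0.1
torchvision==0.15.2
onnx==1.14.0
opencv-python==4.9.0.80
"""


def parse_pins(text):
    """Turn 'name==version' lines into a {name: version} dict."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, _, version = line.partition("==")
        pins[name] = version
    return pins


def check_pins(pins):
    """Compare each pinned version with the installed distribution."""
    report = {}
    for name, wanted in pins.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        report[name] = "ok" if found == wanted else f"installed {found}, pinned {wanted}"
    return report


for package, status in check_pins(parse_pins(PINS_TEXT)).items():
    print(f"{package}: {status}")
```

A mismatch is not necessarily a problem, but it is the first thing worth checking if the later steps fail.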

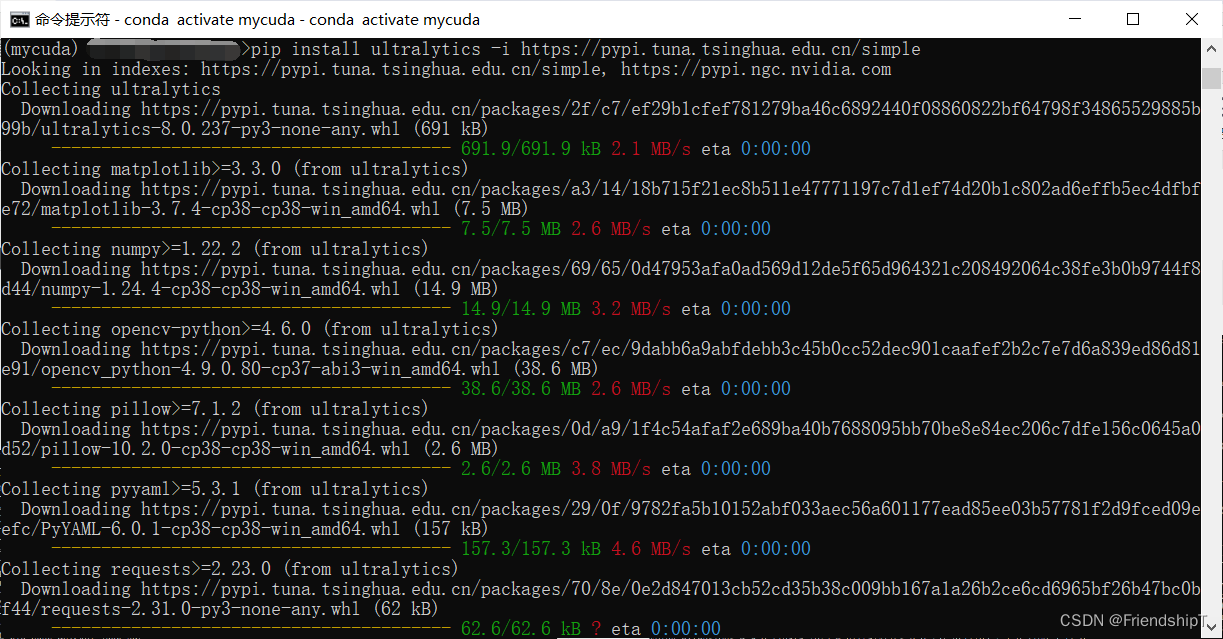

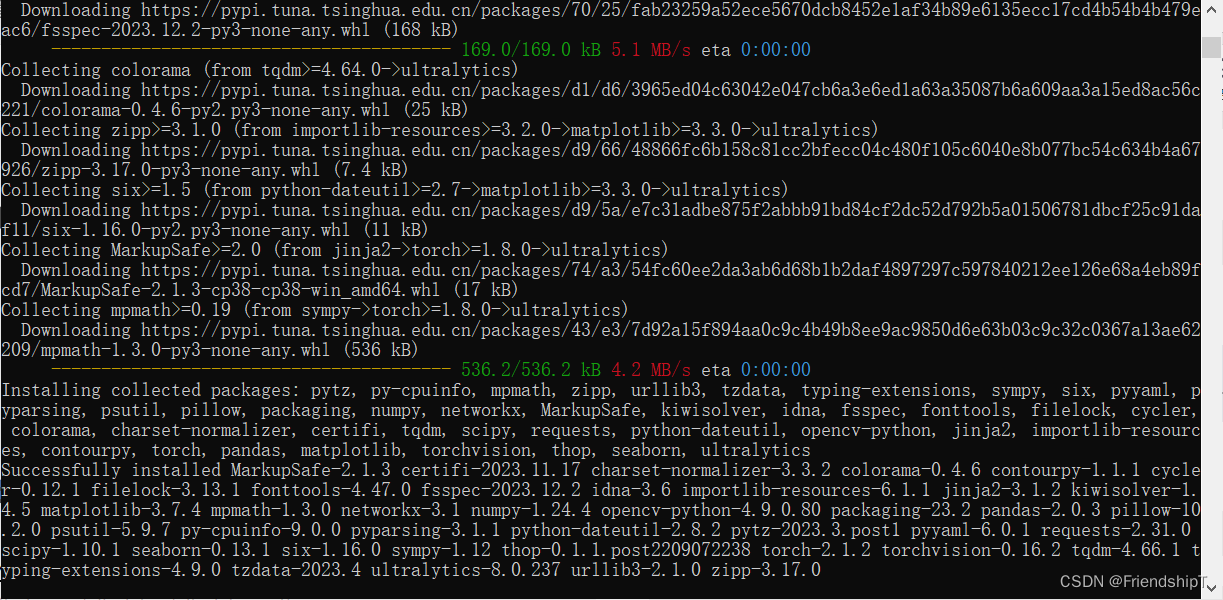

pip install ultralytics

# or

pip install ultralytics -i https://pypi.tuna.tsinghua.edu.cn/simple # Tsinghua mirror, faster when downloading from mainland China

- YOLOv10 source code: https://github.com/THU-MIG/yolov10.git

git clone https://github.com/THU-MIG/yolov10.git

cd yolov10

# conda create -n yolov10 python=3.9

# conda activate yolov10

pip install -r requirements.txt

pip install -e .

Cloning into 'yolov10'...

remote: Enumerating objects: 4583, done.

remote: Counting objects: 100% (4583/4583), done.

remote: Compressing objects: 100% (1270/1270), done.

remote: Total 4583 (delta 2981), reused 4576 (delta 2979), pack-reused 0

Receiving objects: 100% (4583/4583), 23.95 MiB | 1.55 MiB/s, done.

Resolving deltas: 100% (2981/2981), done.

Alternatively, go to https://github.com/THU-MIG/yolov10.git, download the source code as a zip package, and unzip it.

cd yolov10

# conda create -n yolov10 python=3.9

# conda activate yolov10

pip install -r requirements.txt

pip install -e .
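After either installation route, a quick sanity check helps before moving on to training. The sketch below uses only the standard library; `environment_report` is a hypothetical helper name, and it only checks that the packages can be found and that the `yolo` command is on the PATH, not that the GPU works.

```python
import importlib.util
import shutil


def environment_report():
    """Report whether the pieces needed by the later commands can be found."""
    return {
        "torch importable": importlib.util.find_spec("torch") is not None,
        "ultralytics importable": importlib.util.find_spec("ultralytics") is not None,
        "yolo CLI on PATH": shutil.which("yolo") is not None,
    }


for item, ok in environment_report().items():
    print(f"{item}: {'yes' if ok else 'NO'}")
```

If `yolo CLI on PATH` is reported as missing, make sure the environment in which you ran `pip install -e .` is the one that is currently active.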

The dataset used in this article is free to download: https://download.csdn.net/download/FriendshipTang/88045378
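The training command expects a dataset description file (`road_sign.yaml` in this article). Its exact contents ship with the dataset, so the following is only a sketch of the general Ultralytics layout (`path`, `train`, `val`, `names`). The helper name `render_dataset_yaml`, the sub-directory names, and the class names are placeholders of mine; replace them with the real ones from the dataset.

```python
def render_dataset_yaml(root, train_dir, val_dir, names):
    """Render a dataset description in the Ultralytics YAML layout."""
    lines = [
        f"path: {root}",        # dataset root directory
        f"train: {train_dir}",  # training images, relative to path
        f"val: {val_dir}",      # validation images, relative to path
        "names:",
    ]
    # Class ids must match the ids used in the YOLO label files.
    lines += [f"  {i}: {name}" for i, name in enumerate(names)]
    return "\n".join(lines) + "\n"


yaml_text = render_dataset_yaml(
    root="../datasets/Road_Sign_VOC_and_YOLO_datasets",
    train_dir="trainset/images",       # placeholder directory name
    val_dir="valset/images",           # placeholder directory name
    names=["class_a", "class_b"],      # placeholder class names
)
print(yaml_text)
```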

yolo detect train data=../datasets/Road_Sign_VOC_and_YOLO_datasets/road_sign.yaml model=yolov10n.yaml epochs=100 batch=4 imgsz=640 device=0
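The same training run can also be started from Python instead of the command line. This is a sketch under the assumption that the package installed from the YOLOv10 repository exports a `YOLOv10` class, as its README shows; the argument values are copied from the command above, and the `train` wrapper is a hypothetical helper of mine.

```python
# Arguments copied from the CLI command in this article.
TRAIN_ARGS = {
    "data": "../datasets/Road_Sign_VOC_and_YOLO_datasets/road_sign.yaml",
    "epochs": 100,
    "batch": 4,
    "imgsz": 640,
    "device": 0,
}


def train(model_cfg="yolov10n.yaml", **overrides):
    """Build a YOLOv10 model from a config file and train it."""
    # Imported lazily so this file can be loaded without the package installed.
    # Assumption: the class is provided by the THU-MIG/yolov10 install.
    from ultralytics import YOLOv10

    model = YOLOv10(model_cfg)
    # Keyword overrides win over the defaults above.
    return model.train(**{**TRAIN_ARGS, **overrides})


# Call train() to start a run, or e.g. train(epochs=10) for a short trial.
```

If GPU memory is tight, lowering `batch` or `imgsz` through the overrides is usually the first thing to try.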

yolo predict model=runs/detect/train4/weights/best.pt source=E:/mytest/datasets/Road_Sign_VOC_and_YOLO_datasets/testset/images

yolo detect val data=../datasets/Road_Sign_VOC_and_YOLO_datasets/road_sign.yaml model=runs/detect/train4/weights/best.pt batch=4 imgsz=640 device=0
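Prediction and validation can be scripted in the same way. Again this is a hedged sketch: it assumes the `YOLOv10` class from the repository install and the usual Ultralytics result objects (`r.boxes`, `metrics.box.map50`), and `predict_and_validate` is a hypothetical wrapper. The weights path assumes the run directory is `train4`, as in the commands above; yours may have a different number.

```python
WEIGHTS = "runs/detect/train4/weights/best.pt"  # run number depends on your training
SOURCE = "E:/mytest/datasets/Road_Sign_VOC_and_YOLO_datasets/testset/images"
DATA = "../datasets/Road_Sign_VOC_and_YOLO_datasets/road_sign.yaml"


def predict_and_validate(weights=WEIGHTS, source=SOURCE, data=DATA):
    """Run inference on a folder of images, then evaluate on the validation split."""
    # Assumption: the class is provided by the THU-MIG/yolov10 install.
    from ultralytics import YOLOv10

    model = YOLOv10(weights)

    # Inference: one result object per image; save=True writes annotated images.
    results = model.predict(source=source, save=True)
    for r in results:
        print(r.path, "->", len(r.boxes), "boxes")

    # Validation: same settings as the CLI command above.
    metrics = model.val(data=data, batch=4, imgsz=640, device=0)
    print("mAP50:", metrics.box.map50, "mAP50-95:", metrics.box.map)
    return results, metrics
```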
