2024-07-12

한어Русский языкEnglishFrançaisIndonesianSanskrit日本語DeutschPortuguêsΕλληνικάespañolItalianoSuomalainenLatina

Support vector machine (SVM) is an efficient supervised learning algorithm widely used in classification and regression problems. It distinguishes data points of different categories by finding an optimal hyperplane in the feature space. The goal is to maximize the interval between two types of data points, thereby improving the generalization ability of the model. The key concepts of SVM include hyperplane, interval, support vector and kernel function. The kernel function allows SVM to handle nonlinear problems and find linearly separable hyperplanes by mapping data to a higher-dimensional space. In addition, soft interval and regularization techniques are used to deal with the non-complete linear separability of data while controlling model complexity and preventing overfitting. The implementation of SVM involves selecting a suitable kernel function, constructing and solving a convex quadratic programming problem, and evaluating and applying the trained model. Its advantages are that the model is simple, easy to implement, and has good generalization ability, but it has high computational complexity, is sensitive to kernel function and parameter selection, and may encounter performance bottlenecks when processing large-scale data sets.

🏘️🏘️个人主页:Paying respect to mountains and rivers。

🎖️🎖️:A rising star creator in the Python field, certified as a rising star by CSDN, a content partner of CSDN, an expert blogger in the Alibaba Cloud community, a mentor for the Rising Star Program, and an in-service data analyst.

💕💕悲索之人烈焰加身,堕落者不可饶恕。永恒燃烧的羽翼,带我脱离凡间的沉沦。

| type | Column |

|---|---|

| Python Basics | Python Basics - Detailed Edition |

| Python Advanced | Python Basics - Module Edition |

| Python Advanced | Python网络爬虫从入门到精通🔥🔥🔥 |

| Web full stack development | Getting started with Django basics |

| Web full stack development | Introduction to HTML and CSS Basics |

| Web full stack development | JavaScript Basics |

| Python Data Analysis | Python数据分析项目🔥🔥 |

| Machine Learning | 机器学习算法🔥🔥 |

| AI | AI |

Support Vector Machine (SVM) is a powerful machine learning algorithm that is mainly used to solve classification and regression problems. It is based on the principle of structural risk minimization in statistical learning theory and distinguishes different data categories by finding an optimal decision boundary, namely a hyperplane, in the feature space. The choice of this hyperplane aims to maximize the shortest distance from the data point to the hyperplane, which is called the margin. The larger the margin, the better the generalization ability of the model is usually.

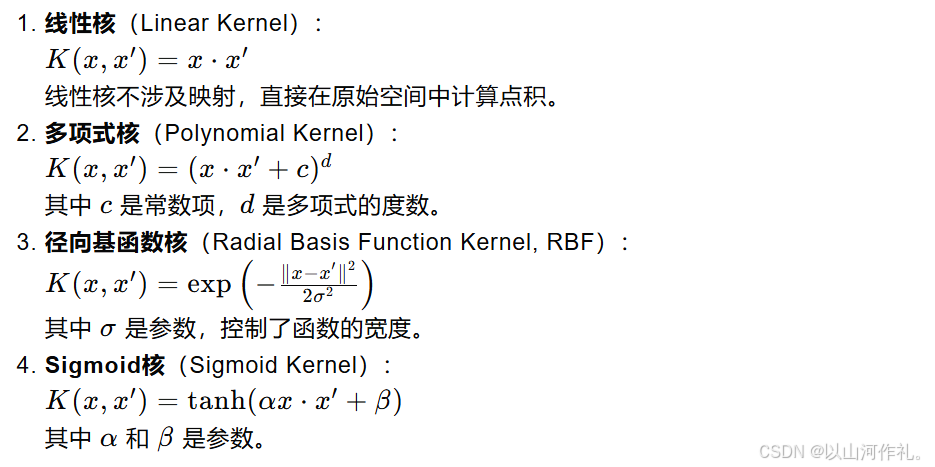

The core of SVM is the support vector, which is a set of data points that are crucial to determine the position and direction of the hyperplane. They are the points closest to the hyperplane. If the data is not linearly separable, SVM maps the original data to a higher-dimensional space by introducing a kernel function, and searches for a linearly separable hyperplane in this new space. Commonly used kernel functions include linear kernels, polynomial kernels, radial basis function (RBF) kernels, etc.

In order to deal with noise and outliers in the data, SVM introduces the concept of soft margin, which allows some data points to be misclassified in exchange for better generalization performance. At the same time, the complexity of the model is controlled by regularization terms to avoid overfitting. The training process of SVM usually involves solving a convex quadratic programming problem to find the optimal hyperplane parameters.

See the figure below, in a two-dimensional environment, points R, S, G and other points close to the middle black line can be regarded as support vectors, which can determine the specific parameters of the classifier, that is, the black line.

Support vectors and hyperplanes are the core concepts in the Support Vector Machine (SVM) algorithm. Below I will explain these two concepts in detail:

In mathematics, a hyperplane is a linear subspace that has one dimension lower than the space it exists in. For example, in two-dimensional space, a hyperplane is a straight line; in three-dimensional space, it is a plane; in higher-dimensional spaces, it is still a linear boundary, but it may be difficult to understand intuitively.

In SVM, a hyperplane is used to separate data into different categories. For a two-dimensional space, you can imagine the hyperplane as a straight line that divides the space into two parts, each containing data points of one category. For a higher dimensional space, the hyperplane is a higher dimensional linear boundary that is also used to separate data points.

Support vectors are those data points that are located closest to the hyperplane. They are the key data points that the SVM uses during training to determine the position of the hyperplane. If you remove any of these points, it will change the position and direction of the hyperplane.

Support vectors are important because they define the boundaries (i.e., intervals) between data points. The goal of SVM is to find a hyperplane that maximizes the distance (interval) between the nearest support vectors (i.e., the data points closest to the hyperplane) and the hyperplane. The size of this interval is an important indicator of the generalization ability of the model.

In practical applications, data may not be linearly separable. In this case, SVM can use kernel techniques to deal with nonlinear problems. The kernel function can map the original data to a higher-dimensional space and find a linearly separable hyperplane in the new space. Commonly used kernel functions include linear kernel, polynomial kernel, radial basis function (RBF) kernel, etc.

When processing real data, it may not be possible to find a perfect hyperplane to completely separate all data points. At this time, SVM introduces the concept of soft margin, which allows some data points to be misclassified in exchange for better generalization ability. At the same time, the complexity of the model is controlled by regularization terms (usually the norm of the normal vector) to avoid overfitting.

The distance formula from a point to a hyperplane is used to calculate the shortest distance from a point to a given hyperplane. The hyperplane can be represented by the following equation in n-dimensional space:

in:

w is an n-dimensional normal vector, perpendicular to the hyperplane.

x is an n-dimensional point located in space.

b is the bias term of the hyperplane.

The vertical distance d from point x to this hyperplane can be calculated by the following formula:

这个公式的几何意义是:从点 𝑥 向超平面作垂线,垂足到点 𝑥的距离就是𝑑这个距离也代表了点 𝑥到超平面的“间隔”。在支持向量机中,间隔的大小是非常重要的,因为它与模型的泛化能力有关。SVM的目标是找到这样一个超平面,使得间隔最大化,即所有数据点到这个超平面的距离之和最大。

Optimization Model in Linearly Separable Case

When the data is linearly separable, that is, there is a hyperplane that can perfectly separate data points of different categories, the goal of SVM is to find a hyperplane that maximizes the distance between the two closest data points (i.e., support vectors) and the hyperplane. This distance is called margin.

The hyperplane can be represented as:

Maximum Margin Optimization Problem

The objective function of SVM is to maximize the margin, which can be expressed as:

Introducing Lagrange multipliers

Introducing Lagrange multipliers

Dual Problem

In support vector machines (SVM), slack variables are a mechanism introduced to handle nonlinear separability in data sets. Ideally, if the data is linearly separable, SVM can find a hyperplane that completely separates data points of different categories while maximizing the interval. However, in the real world, many data sets are not completely linearly separable, which requires the use of slack variables to allow some data points to be misclassified, thereby improving the generalization ability of the model.

这里的 𝐶是一个正的调节参数,用于控制模型对误分类的惩罚程度。𝐶的值越大,模型对误分类的惩罚越重,越倾向于找到没有误分类的解;𝐶的值越小,模型对误分类的容忍度越高,越容易找到间隔更大的解,即使这意味着更多的误分类。

Even in the case of nonlinear separability, by using the kernel trick to map the data into a high-dimensional space, combined with slack variables, SVM can still find the hyperplane with the maximum margin.

Kernel function is an important tool in support vector machine (SVM), which allows SVM to effectively handle nonlinear problems in high-dimensional space. The basic idea of kernel function is to map the original data from low-dimensional space to high-dimensional space, and find the linear separability of data in this high-dimensional space.