2024-07-12


A PNN (Probabilistic Neural Network) is a neural network model grounded in probability theory and used mainly for classification problems. It was first proposed by Donald F. Specht in 1990. It is a highly effective classification algorithm.

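The principle of a PNN can be summarized briefly: measure the similarity between the input and every stored training sample, accumulate those similarities per class, and assign the input to the class with the highest total.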

In general, PNN is an effective classification algorithm that suits classification problems in many fields, such as image recognition and text classification.

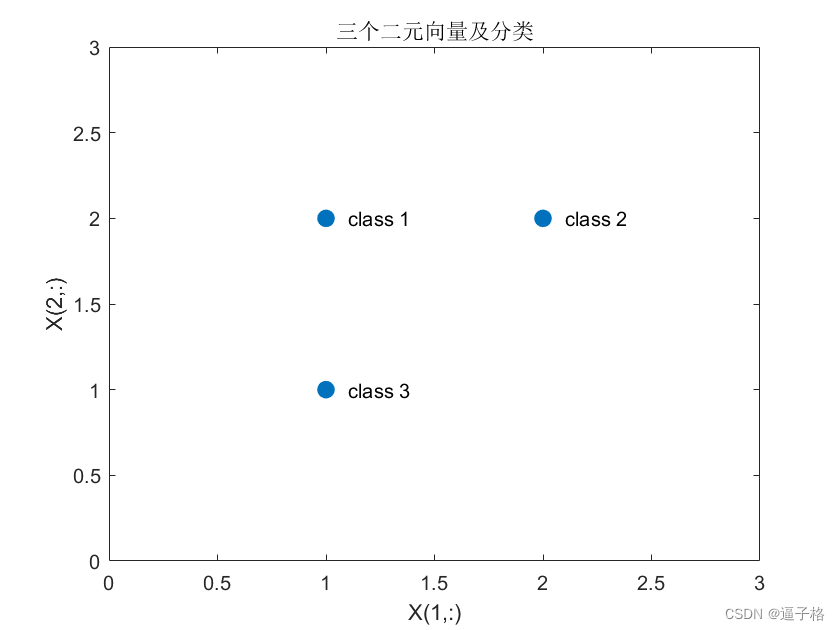

Here are three two-element input vectors X and their associated classes Tc.

Create a probabilistic neural network that classifies these vectors correctly.

A probabilistic neural network (PNN) is a kind of radial basis network suited to classification problems.

net = newpnn(P,T,spread) takes two or three arguments and returns a new probabilistic neural network.

P: R-by-Q matrix of Q input vectors

T: S-by-Q matrix of Q target class vectors

spread: spread of the radial basis functions (default = 0.1)

If spread is near zero, the network acts as a nearest neighbor classifier. As spread becomes larger, the designed network takes several nearby design vectors into account.
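The effect of spread can be illustrated without the toolbox. The sketch below is a hand-rolled approximation, not the newpnn implementation: it assumes a Gaussian activation scaled so that it equals 0.5 at a distance of spread, and it sums the activations per class. The 1-D data, the query point, and the helper names act, argmax, and classify are hypothetical.

```matlab
% Hypothetical 1-D data (not from the article): one class-1 sample close to
% the query and three class-2 samples a little farther away.
P  = [0 1.0 1.2 1.4];   % training inputs
Tc = [1 2 2 2];         % class index of each training input
x  = 0.4;               % query point

% Assumed Gaussian activation: equals 0.5 when the distance d equals spread.
act = @(d, spread) exp(-(sqrt(-log(0.5)) / spread * d).^2);

% Index of the largest entry of a vector (first one on ties).
argmax = @(v) find(v == max(v), 1);

% Sum the activations per class and return the class with the largest sum.
classify = @(spread) argmax(accumarray(Tc(:), act(abs(P(:) - x), spread)));

classSmall = classify(0.1);   % narrow kernels: only the nearest sample counts
classLarge = classify(2);     % wide kernels: several samples contribute

fprintf('spread = 0.1 -> class %d\n', classSmall);
fprintf('spread = 2   -> class %d\n', classLarge);
```

With spread = 0.1 only the nearest sample contributes noticeably, so the query takes that sample's class (1). With spread = 2 the three class-2 samples together outweigh it, and the query is assigned to class 2.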

[Y,Xf,Af] = sim(net,X,Xi,Ai,T) simulates the network and takes the following arguments.

net: network

X: network inputs

Xi: initial input delay conditions (default = 0)

Ai: initial layer delay conditions (default = 0)

T: network targets (default = 0)

- X = [1 2; 2 2; 1 1]';

- Tc = [1 2 3];

- figure(1)

- plot(X(1,:),X(2,:),'.','markersize',30)

- for i = 1:3, text(X(1,i)+0.1,X(2,i),sprintf('class %g',Tc(i))), end

- axis([0 3 0 3])

- title('Three two-element vectors and their classes')

- xlabel('X(1,:)')

- ylabel('X(2,:)')

Convert the target class indices Tc to vectors T.

Use NEWPNN to design a probabilistic neural network.

A SPREAD value of 1 is used because that is a typical distance between the input vectors.

- T = ind2vec(Tc);

- spread = 1;

- net = newpnn(X,T,spread);

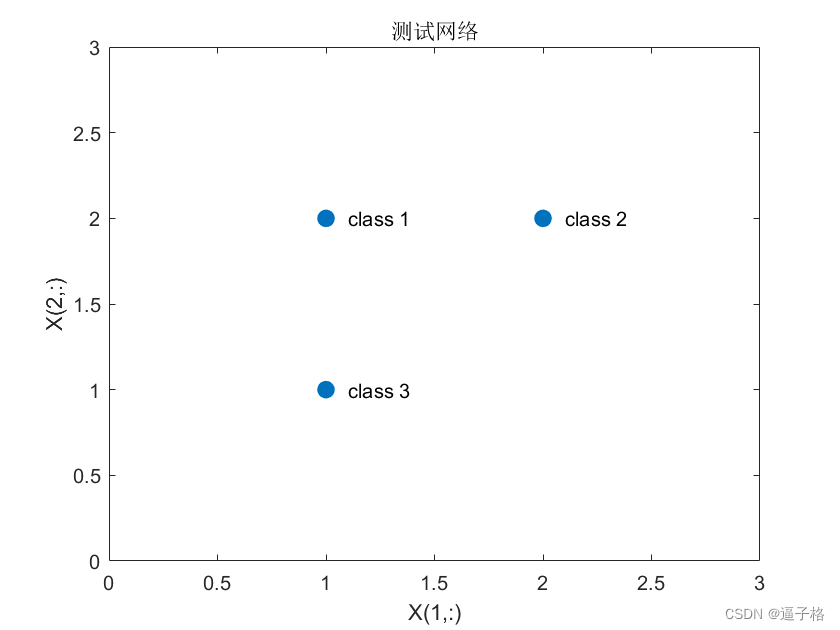
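To make the target format concrete, the one-hot matrix that ind2vec builds from Tc can be reproduced with core MATLAB functions. This is a sketch of an equivalent construction, not the toolbox source; ind2vec itself returns a sparse matrix, which is why full is applied here before displaying the result.

```matlab
Tc = [1 2 3];                    % class index of each training vector
Q  = numel(Tc);                  % number of training vectors
T  = full(sparse(Tc, 1:Q, 1));   % column q holds a single 1 in row Tc(q)
disp(T)
```

Each column of T corresponds to one training vector, so T has as many rows as there are classes and as many columns as there are vectors.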

- % Test the network

- % Test the network on the design input vectors by simulating it and converting its vector outputs to indices.

- Y = net(X);

- Yc = vec2ind(Y);

- figure(2)

- plot(X(1,:),X(2,:),'.','markersize',30)

- axis([0 3 0 3])

- for i = 1:3,text(X(1,i)+0.1,X(2,i),sprintf('class %g',Yc(i))),end

- title('Testing the network')

- xlabel('X(1,:)')

- ylabel('X(2,:)')

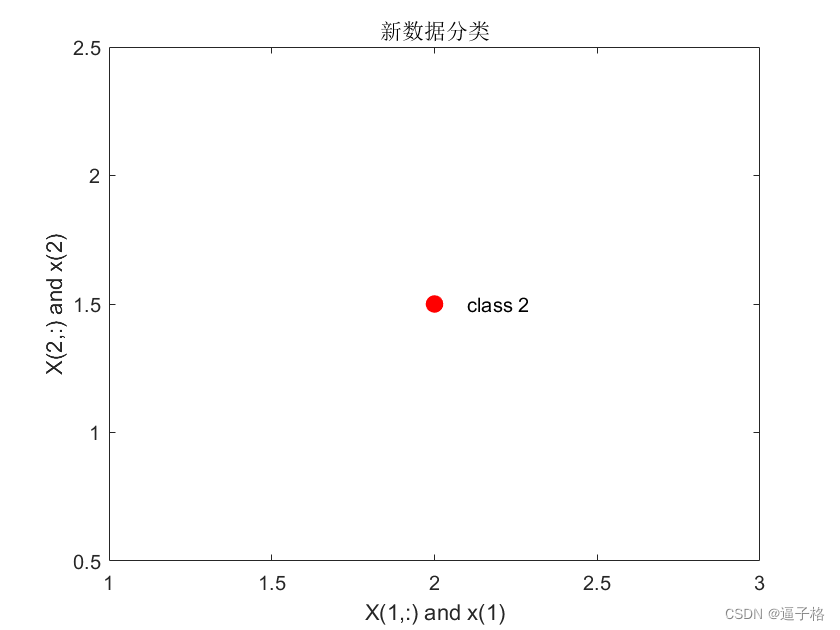
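The conversion that vec2ind performs can be mimicked with max, which may help when reading the test step. The output matrix Y below is a hypothetical stand-in for a network response, not a value produced by the article's network, and nWrong is a name chosen here for illustration.

```matlab
Tc = [1 2 3];                     % target class indices
Y  = sparse([1 2 3], 1:3, 1);     % hypothetical one-hot network output

% The row index of the largest entry in each column is the predicted class.
[~, Yc] = max(full(Y), [], 1);

% Count how many design vectors were assigned to the wrong class.
nWrong = sum(Yc ~= Tc);
fprintf('misclassified design vectors: %d\n', nWrong);
```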

- x = [2; 1.5];

- y = net(x);

- ac = vec2ind(y);

- hold on

- figure(3)

- plot(x(1),x(2),'.','markersize',30,'color',[1 0 0])

- text(x(1)+0.1,x(2),sprintf('class %g',ac))

- hold off

- title('Classifying new data')

- xlabel('X(1,:) and x(1)')

- ylabel('X(2,:) and x(2)')

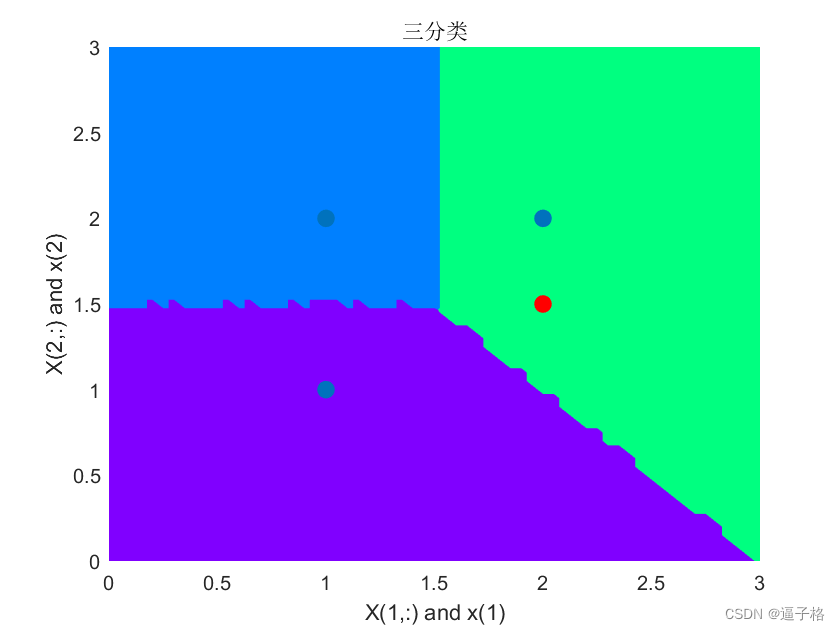

The probabilistic neural network divides the input space into three classes.

- x1 = 0:.05:3;

- x2 = x1;

- [X1,X2] = meshgrid(x1,x2);

- xx = [X1(:) X2(:)]';

- yy = net(xx);

- yy = full(yy);

- m = mesh(X1,X2,reshape(yy(1,:),length(x1),length(x2)));

- m.FaceColor = [0 0.5 1];

- m.LineStyle = 'none';

- hold on

- m = mesh(X1,X2,reshape(yy(2,:),length(x1),length(x2)));

- m.FaceColor = [0 1.0 0.5];

- m.LineStyle = 'none';

- m = mesh(X1,X2,reshape(yy(3,:),length(x1),length(x2)));

- m.FaceColor = [0.5 0 1];

- m.LineStyle = 'none';

- plot3(X(1,:),X(2,:),[1 1 1]+0.1,'.','markersize',30)

- plot3(x(1),x(2),1.1,'.','markersize',30,'color',[1 0 0])

- hold off

- view(2)

- title('Three classes')

- xlabel('X(1,:) and x(1)')

- ylabel('X(2,:) and x(2)')

A probabilistic neural network (PNN) is an artificial neural network used for pattern classification. It is based on Bayes' theorem and a Gaussian mixture model (one Gaussian kernel centered on each training sample), and it can be applied to many kinds of data, both continuous and discrete. On classification problems a PNN can be more flexible than traditional neural networks and can offer good accuracy and generalization.
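One common way to write this idea down is a kernel density estimate per class followed by a Bayes decision. The notation is an assumption introduced here, not taken from the article: $\mathbf{x}_i$ are the training samples with class labels $c_i$, $N_k$ is the number of samples in class $k$, $\sigma$ is the smoothing width (playing the role of spread), and $\pi_k$ is the prior of class $k$. The Gaussian normalizing constant is omitted because it is the same for every class.

```latex
\hat{f}_k(\mathbf{x}) = \frac{1}{N_k} \sum_{i \,:\, c_i = k}
  \exp\!\left( -\frac{\lVert \mathbf{x} - \mathbf{x}_i \rVert^2}{2\sigma^2} \right),
\qquad
\hat{c}(\mathbf{x}) = \arg\max_k \; \pi_k \, \hat{f}_k(\mathbf{x})
```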

The core working principle of a PNN is to compute the similarity between the input data and each sample in the training set, and to classify the input according to that similarity. A PNN consists of four layers: an input layer, a pattern layer, a competitive (summation) layer, and an output layer. The input data first pass through the input layer to the pattern layer, the similarities are then evaluated through the competitive layer, and the output layer finally assigns the class according to similarity.
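The four stages can be traced by hand on the article's own data. The following is a minimal sketch in core MATLAB, assuming a Gaussian pattern-layer activation that equals 0.5 at a distance of spread and a plain per-class sum; it illustrates the idea and is not the code that newpnn runs internally. The variable names d, a1, scores, and c are chosen here for illustration.

```matlab
X      = [1 2; 2 2; 1 1]';   % 2-by-3 training vectors, as in the article
Tc     = [1 2 3];            % class of each training vector
x      = [2; 1.5];           % new input, as in the article
spread = 1;

% Input layer: x is passed on unchanged.

% Pattern layer: one unit per training vector, activated by distance.
% (X - x relies on implicit expansion, available from R2016b.)
d  = sqrt(sum((X - x).^2, 1));                   % Euclidean distances, 1-by-3
a1 = exp(-(sqrt(-log(0.5)) / spread * d).^2);    % assumed Gaussian activation

% Summation stage: add up the activations that belong to each class.
scores = accumarray(Tc(:), a1(:));               % 3-by-1 class scores

% Output layer: the class with the largest score wins.
[~, c] = max(scores);
fprintf('new input assigned to class %d\n', c);
```

The nearest training vector is [2; 2] at distance 0.5, so under these assumptions class 2 receives the largest score.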

In MATLAB, you can use the relevant toolbox functions or write your own program to implement PNN classification. First prepare the training and test data, then build the PNN model from the training data. Once the model is built, the test data can be used to evaluate the classification performance of the PNN and to make predictions.
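The workflow described above can be sketched in core MATLAB as follows. Everything in it is an assumption made for illustration: the two small clusters, the spread of 0.5, the Gaussian activation, and the variable names. It stands in for a toolbox-based version rather than reproducing one.

```matlab
% Hypothetical training data: three points near (0,0) and three near (2,2).
Ptrain = [0 0.2 -0.1 2.0 1.8 2.2;
          0 0.1  0.3 2.0 2.1 1.9];
Ttrain = [1 1 1 2 2 2];

% Hypothetical held-out test data with known classes.
Ptest  = [0.1 1.9  0.3 2.1;
          0.1 2.0 -0.2 2.2];
Ttest  = [1 2 1 2];

spread = 0.5;
b      = sqrt(-log(0.5)) / spread;   % activation is 0.5 at distance == spread

% "Training" only stores the samples; classify each test column in turn.
pred = zeros(1, size(Ptest, 2));
for q = 1:size(Ptest, 2)
    d      = sqrt(sum((Ptrain - Ptest(:, q)).^2, 1));   % distances to samples
    a1     = exp(-(b * d).^2);                          % pattern activations
    scores = accumarray(Ttrain(:), a1(:));              % per-class sums
    [~, pred(q)] = max(scores);                         % winning class
end

accuracy  = mean(pred == Ttest);                          % fraction correct
confusion = accumarray([Ttest(:) pred(:)], 1, [2 2]);     % rows: true class
fprintf('accuracy = %.2f\n', accuracy);
disp(confusion)
```

Because the two clusters are well separated, every test point lands in its own class here; on real data the confusion matrix shows which classes get mixed up and can guide the choice of spread.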

Overall, PNN is a powerful classification method that suits a wide range of classification problems. In practical applications, appropriate features and model parameters can be chosen for the specific problem to achieve better classification results. MATLAB provides a rich set of tools and supporting functions that make PNN easier to implement and apply.

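- %% Classification with a probabilistic neural network (PNN) (MATLAB)

- % Here are three two-element input vectors X and their associated classes Tc.

- % Create a probabilistic neural network that classifies these vectors correctly.

- % Key functions: NEWPNN and SIM

- %% Data set and display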

- X = [1 2; 2 2; 1 1]';

- Tc = [1 2 3];

- figure(1)

- plot(X(1,:),X(2,:),'.','markersize',30)

- for i = 1:3, text(X(1,i)+0.1,X(2,i),sprintf('class %g',Tc(i))), end

- axis([0 3 0 3])

- title('Three two-element vectors and their classes')

- xlabel('X(1,:)')

- ylabel('X(2,:)')

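- %% Test the network on the design input vectors

- % Convert the target class indices Tc to vectors T

- % Design a probabilistic neural network with NEWPNN

- % SPREAD is set to 1 because that is a typical distance between the input vectors.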

- T = ind2vec(Tc);

- spread = 1;

- net = newpnn(X,T,spread);

- % Test the network

- % Test the network on the design input vectors by simulating it and converting its vector outputs to indices.

- Y = net(X);

- Yc = vec2ind(Y);

- figure(2)

- plot(X(1,:),X(2,:),'.','markersize',30)

- axis([0 3 0 3])

- for i = 1:3,text(X(1,i)+0.1,X(2,i),sprintf('class %g',Yc(i))),end

- title('Testing the network')

- xlabel('X(1,:)')

- ylabel('X(2,:)')

- % Test on new data

- x = [2; 1.5];

- y = net(x);

- ac = vec2ind(y);

- hold on

- figure(3)

- plot(x(1),x(2),'.','markersize',30,'color',[1 0 0])

- text(x(1)+0.1,x(2),sprintf('class %g',ac))

- hold off

- title('Classifying new data')

- xlabel('X(1,:) and x(1)')

- ylabel('X(2,:) and x(2)')

- %% The probabilistic neural network divides the input space into three classes.

- x1 = 0:.05:3;

- x2 = x1;

- [X1,X2] = meshgrid(x1,x2);

- xx = [X1(:) X2(:)]';

- yy = net(xx);

- yy = full(yy);

- m = mesh(X1,X2,reshape(yy(1,:),length(x1),length(x2)));

- m.FaceColor = [0 0.5 1];

- m.LineStyle = 'none';

- hold on

- m = mesh(X1,X2,reshape(yy(2,:),length(x1),length(x2)));

- m.FaceColor = [0 1.0 0.5];

- m.LineStyle = 'none';

- m = mesh(X1,X2,reshape(yy(3,:),length(x1),length(x2)));

- m.FaceColor = [0.5 0 1];

- m.LineStyle = 'none';

- plot3(X(1,:),X(2,:),[1 1 1]+0.1,'.','markersize',30)

- plot3(x(1),x(2),1.1,'.','markersize',30,'color',[1 0 0])

- hold off

- view(2)

- title('Three classes')

- xlabel('X(1,:) and x(1)')

- ylabel('X(2,:) and x(2)')
