2024-07-08

한어Русский языкEnglishFrançaisIndonesianSanskrit日本語DeutschPortuguêsΕλληνικάespañolItalianoSuomalainenLatina

This week I read a paper titled Chasing Fairness in Graphs: A GNN Architecture Perspective. This paper proposes a fair message passing scheme (FMP) guided by a unified optimization framework for graph neural networks (GNNs), which aims to improve the fairness of graph data processing. The scheme is implemented through two core steps: first, aggregating graph data, and then explicitly pursuing representations of the centers of various statistical groups to reduce bias. The method constructs an optimization problem that considers both fairness and data smoothness, and uses Fenchel conjugation and gradient descent techniques combined with the characteristics of the softmax function to efficiently solve it and generate node representations that are both fair and informative. This scheme is directly embedded in GNNs to improve the fairness and accuracy of node classification tasks without the need for data preprocessing. Experimental results show that on real datasets, FMP performs better than multiple baseline models, and its effectiveness is fully verified from the perspectives of model architecture, efficiency, and sensitive attribute utilization.

This week’s weekly newspaper decodes the paper entitled Chasing Fairness in Graphs: A GNN Architecture Perspective. This paper introduces a Fair Message Passing (FMP) scheme guided by a unified optimization framework of Graph Neural Networks (GNNs), aiming to enhance fairness in graph data processing. The FMP achieves this through two core steps: first, aggregating graph data, and then explicitly striving to represent the centers of various statistical groups to mitigate bias. This approach formulates an optimization problem that considers both fairness and data smoothness, and leverages Fenchel duality and gradient descent techniques, combined with the properties of the softmax function, to efficiently solve the problem and generate fair and informative node representations. This scheme is directly embedded into GNNs to improve the fairness and accuracy of node classification tasks, without the need for data preprocessing. Experimental results on real-world datasets show that FMP outperforms multiple baseline models, comprehensively validating its effectiveness from the perspectives of model architecture, efficiency, and the utilization of sensitive attributes.

标题:Chasing Fairness in Graphs: A GNN Architecture Perspective

作者:Zhimeng Jiang1, Xiaotian Han1, Chao Fan2, Zirui Liu3, Na Zou4, Ali Mostafavi1, Xia Hu3

release:Vol. 38 No. 19: AAAI-24

Link: https://doi.org/10.1609/aaai.v38i19.30115

This paper aims to achieve better fairness through a new GNN framework, so it proposes a unified optimization framework designed within the GNNFair Message Passing (FMP)It is worth noting that FMP usesCross Entropy LossExplicitly present the use of sensitive attributes in the forward propagation of node classification tasks, whileNo data preprocessing requiredIn FMP, firstly adoptpolymerizationto use the neighbor's information, and thenBias MitigationThe step explicitly pushes the data statistics group node representation centers together. In this way, the FMP scheme can aggregate useful information from neighbors and alleviate bias to achieve better fairness and prediction trade-off performance.Node ClassificationExperiments on the task show that the proposed FMP outperforms multiple baselines in terms of fairness and accuracy on three real-world datasets.

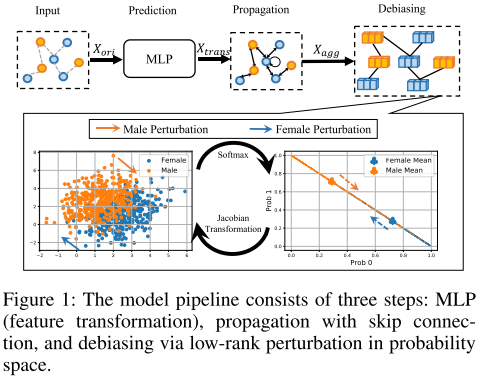

FMP can achieve fair prediction from the perspective of the model backbone. Specifically, fair message passing is formulated as an optimization problem to pursue smoothness and fair node representation simultaneously. Combined with an effective and efficient optimization algorithm, a closed-form fair message passing is derived. Finally, the proposed FMP is integrated into a fair GNN in three stages, including transformation, aggregation, and debiasing steps, as shown in Figure 1. These three stages adopt node features, graph topology, and sensitive attributes, respectively.

min F λ s 2 t r ( F T T ~ F ) 1 2 ∣ ∣ F − X t r a n s ∣ ∣ F 2 λ f ∣ ∣ Δ s S F ( F ) ∣ ∣ 1 (1) min_{mathbf F}frac{lambda_s}{2}tr(mathbf F^T tilde {mathbf T}mathbf F) frac12||mathbf F-mathbf X_{trans}||^2_F lambda_f||Delta_sSF(mathbf F)||_1 tag{1} Fmin2λstr(FTT~F) 21∣∣F−Xtrans∣∣F2 λf∣∣ΔsSF(F)∣∣1(1)

$tilde L represents the normalized Laplace matrix, represents the normalized Laplace matrix,represents the normalized Laplacian matrix,h_s(·)$ and h f ( ⋅ ) h_f(·) hf(⋅) represents the smoothness and fairness objectives, X t r a n s ∈ R n × d o u t X_{trans} in R^{n×d_{out}} Xtrans∈Rn×dout is the transformed d o u t d_{out} doutDimension node features. F ∈ R n × d o u t F in R^{n×d_{out}} F∈Rn×dout are aggregated node features of the same matrix size.

The first two terms preserve the similarity of connected node representations, thereby enhancing the smoothness of the graph. The last term enforces fair node representations so that the average predicted probability between different sensitive attribute groups can remain unchanged.

The regularization coefficients λs and λf adaptively control the trade-off between graph smoothness and fairness.

h

s

(

F

)

=

min

F

λ

s

2

t

r

(

F

T

T

~

F

)

1

2

∣

∣

F

−

X

t

r

a

n

s

∣

∣

F

2

h_s(mathbf F)=min_{mathbf F}frac{lambda_s}{2}tr(mathbf F^T tilde {mathbf T}mathbf F) frac12||mathbf F-mathbf X_{trans}||^2_F

hs(F)=Fmin2λstr(FTT~F) 21∣∣F−Xtrans∣∣F2

Smoothness objective hs(·): The adjacency matrix in existing graph message passing schemes is normalized to improve numerical stability and achieve superior performance. From an edge-centric perspective, the smoothness objective enforces that the connected nodes are represented similarly, since

t

r

(

F

T

T

~

F

)

=

∑

(

v

i

,

v

j

)

∈

E

∣

∣

F

i

d

i

1

−

F

i

d

j

1

∣

∣

F

2

(2)

tr(mathbf F^T tilde {mathbf T}mathbf F)=sum_{(v_i,v_j)in {Epsilon}}||frac{mathbf F_i}{sqrt {d_i 1}}-frac{mathbf F_i}{sqrt{d_j 1}}||^2_F tag{2}

tr(FTT~F)=(vi,vj)∈E∑∣∣di 1Fi−dj 1Fi∣∣F2(2)

Fairness target hf(·): The fairness target measures the deviation of the node representation after aggregation. The sensitive attribute event vector Δs represents the sensitive attribute group and group size by summing the sign and absolute value. The sensitive attribute event vector is

Δ

s

=

1