2024-07-12


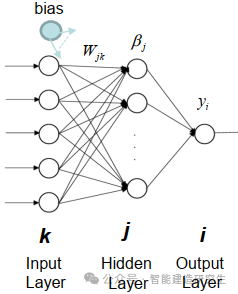

The Extreme Learning Machine (ELM) is a learning algorithm for single-hidden-layer feedforward neural networks (SLFNs), proposed by Huang et al. In theory, ELM tends to deliver good generalization performance at a very high learning speed, and that speed is its main distinguishing feature. Unlike traditional gradient-descent methods (such as BP neural networks), ELM requires no iterative training process. The basic principle is to choose the weights and biases of the hidden layer at random, and then learn the output weights by minimizing the error at the output layer.


Randomly initialize the weights and biases from the input layer to the hidden layer:

The hidden-layer weights and biases are generated at random and stay fixed throughout training.

Compute the hidden-layer output matrix (i.e. the output of the activation function):

Compute the output of the hidden layer using an activation function (such as sigmoid, ReLU, etc.).

Compute the output weights:

The weights from the hidden layer to the output layer are computed by the least-squares method.
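The three steps above can be sketched on a toy regression problem. This is only a minimal illustration: the sine data, the seed, the hidden-layer size of 20, and the names `W`, `b`, `H`, `beta`, and `T` are all assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)  # assumed seed, for repeatability only

# Toy data (an assumption for illustration): 200 points of t = sin(x)
X = np.linspace(-3, 3, 200).reshape(-1, 1)
T = np.sin(X)

n_hidden = 20  # assumed hidden-layer size

# Step 1: draw the input weights and biases once; they are never updated
W = rng.standard_normal((X.shape[1], n_hidden))
b = rng.standard_normal(n_hidden)

# Step 2: hidden-layer output matrix, here with a sigmoid activation
H = 1.0 / (1.0 + np.exp(-(X @ W + b)))

# Step 3: output weights by least squares, via the pseudoinverse of H
beta = np.linalg.pinv(H) @ T

train_mse = float(np.mean((H @ beta - T) ** 2))
print(H.shape, beta.shape, train_mse)
```

Only step 3 involves any fitting, and it is a single linear solve, which is why no iteration over epochs is needed.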

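The mathematical formulation of ELM is as follows:

Given training data $\{(x_i, t_i)\}_{i=1}^{N}$, where $x_i \in \mathbb{R}^{n}$ and $t_i \in \mathbb{R}^{m}$, the output matrix of the hidden layer is computed as:

$$H = g(XW + b)$$

where $X$ is the input matrix, $W$ is the weight matrix from the input layer to the hidden layer, $b$ is the bias vector, and $g(\cdot)$ is the activation function.

The output weights are computed as:

$$\beta = H^{\dagger} T$$

Here, $H^{\dagger}$ is the generalized (Moore-Penrose) inverse of the hidden-layer output matrix and $T$ is the target output matrix.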
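As a quick numerical check of the output-weight formula, the sketch below compares the pseudoinverse solution with NumPy's least-squares solver on random data. The matrix sizes, the seed, and the variable names are assumptions made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(1)  # assumed seed

# Stand-ins for a hidden-layer output matrix and a target matrix
H = rng.standard_normal((50, 5))   # 50 samples, 5 hidden units
T = rng.standard_normal((50, 1))   # one output per sample

# Output weights from the generalized inverse
beta_pinv = np.linalg.pinv(H) @ T

# The same problem solved as ordinary least squares: min ||H beta - T||
beta_lstsq, *_ = np.linalg.lstsq(H, T, rcond=None)

# Both routes should give the same weights
same_solution = np.allclose(beta_pinv, beta_lstsq)

# At the minimizer the normal equations hold: H^T (H beta - T) = 0
gradient = H.T @ (H @ beta_pinv - T)
normal_eq_ok = np.allclose(gradient, 0.0, atol=1e-10)

print(same_solution, normal_eq_ok)
```

When `H` has full column rank, as it does here, the pseudoinverse solution is the unique least-squares minimizer, so the two approaches agree.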

Large-scale data processing: ELM performs well on large data sets because it trains very quickly, which suits tasks that need fast model training, such as large-scale image classification and natural language processing.

Industrial prediction: ELM is widely applied in industrial forecasting, for example quality control and equipment-failure prediction in production processes. It can train predictive models quickly and respond promptly to real-time data.

Financial sector: ELM can be used for financial data analysis and forecasting, such as prediction, risk management, and credit scoring. Because financial data is usually high-dimensional, ELM's fast training is an advantage when processing it.

Medical diagnosis: In medicine, ELM can be used for tasks such as disease prediction and medical image analysis. It can train models quickly and run classification or regression on patient data, helping doctors reach diagnoses faster and more accurately.

Intelligent control systems: ELM can be used in intelligent control systems such as smart homes and intelligent transportation systems. By learning the characteristics and patterns of the environment, ELM can help the system make sound decisions and improve its efficiency and performance.

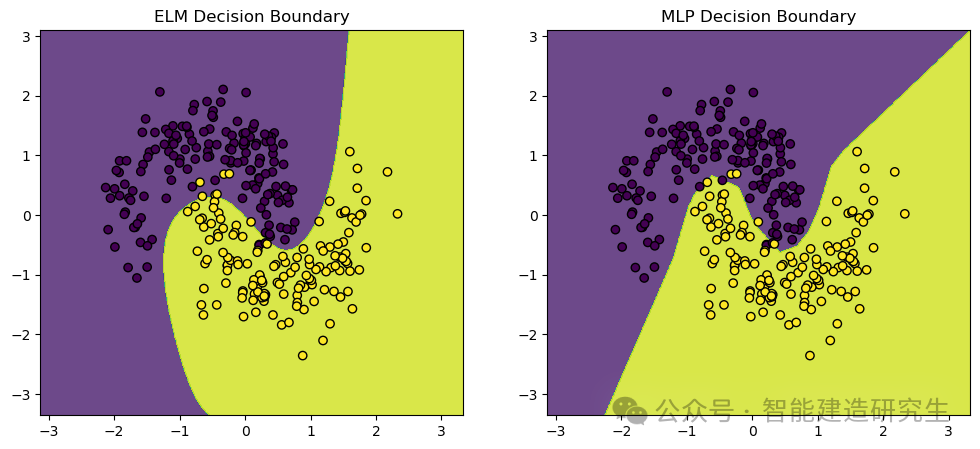

We use the `make_moons` dataset, a toy dataset commonly used for classification tasks in machine learning and deep learning. It generates points distributed in two interleaving half-moon shapes, which makes it well suited to demonstrating the performance and decision boundaries of classification algorithms.
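To get a feel for the data before training anything, the short sketch below generates the dataset and inspects its shape and class balance. The parameter values mirror the ones used later in this post; the printing is added purely for illustration.

```python
import numpy as np
from sklearn.datasets import make_moons

# Two interleaving half-moons; noise adds Gaussian jitter to each point
X, y = make_moons(n_samples=1000, noise=0.2, random_state=42)

# X holds 2-D coordinates, y holds the class label (0 or 1) of each point
print(X.shape, y.shape)

# Number of samples in each class
class_counts = np.bincount(y)
print(class_counts)
```

The two classes are balanced, so plain accuracy is a reasonable metric for the comparison that follows.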

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import accuracy_score


# Define the Extreme Learning Machine (ELM) class
class ELM:
    def __init__(self, n_hidden_units):
        # Number of neurons in the hidden layer
        self.n_hidden_units = n_hidden_units

    def _sigmoid(self, x):
        # Sigmoid activation function
        return 1 / (1 + np.exp(-x))

    def fit(self, X, y):
        # Randomly initialize the input weights
        self.input_weights = np.random.randn(X.shape[1], self.n_hidden_units)
        # Randomly initialize the biases
        self.biases = np.random.randn(self.n_hidden_units)
        # Compute the hidden-layer output matrix H
        H = self._sigmoid(np.dot(X, self.input_weights) + self.biases)
        # Compute the output weights using the pseudoinverse of H
        self.output_weights = np.dot(np.linalg.pinv(H), y)

    def predict(self, X):
        # Compute the hidden-layer output matrix H
        H = self._sigmoid(np.dot(X, self.input_weights) + self.biases)
        # Return the raw (continuous) predictions
        return np.dot(H, self.output_weights)


# Create the dataset and preprocess it
X, y = make_moons(n_samples=1000, noise=0.2, random_state=42)
# Reshape the labels into a 2-D column (this ELM expects 2-D targets)
y = y.reshape(-1, 1)

# Standardize the features
scaler = StandardScaler()
X_scaled = scaler.fit_transform(X)

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(
    X_scaled, y, test_size=0.3, random_state=42
)

# Train and compare ELM and MLP

# Train the ELM
elm = ELM(n_hidden_units=10)
elm.fit(X_train, y_train)
y_pred_elm = elm.predict(X_test)
# Threshold the continuous predictions to get class labels
y_pred_elm_class = (y_pred_elm > 0.5).astype(int)
# Compute the ELM accuracy
accuracy_elm = accuracy_score(y_test.ravel(), y_pred_elm_class.ravel())

# Train the MLP
mlp = MLPClassifier(hidden_layer_sizes=(10,), max_iter=1000, random_state=42)
mlp.fit(X_train, y_train.ravel())
# Predict on the test set
y_pred_mlp = mlp.predict(X_test)
# Compute the MLP accuracy
accuracy_mlp = accuracy_score(y_test.ravel(), y_pred_mlp)

# Print the accuracy of ELM and MLP
print(f"ELM Accuracy: {accuracy_elm}")
print(f"MLP Accuracy: {accuracy_mlp}")


# Visualize the results
def plot_decision_boundary(model, X, y, ax, title):
    # Set the plotting range
    x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
    # Build the grid
    xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.01),
                         np.arange(y_min, y_max, 0.01))
    # Predict every point on the grid
    Z = model(np.c_[xx.ravel(), yy.ravel()])
    Z = (Z > 0.5).astype(int)
    Z = Z.reshape(xx.shape)
    # Draw the decision boundary
    ax.contourf(xx, yy, Z, alpha=0.8)
    # Draw the data points
    ax.scatter(X[:, 0], X[:, 1], c=y.ravel(), edgecolors='k', marker='o')
    ax.set_title(title)


# Create the figure
fig, axs = plt.subplots(1, 2, figsize=(12, 5))

# Plot the ELM decision boundary
plot_decision_boundary(lambda x: elm.predict(x), X_test, y_test, axs[0], "ELM Decision Boundary")
# Plot the MLP decision boundary
plot_decision_boundary(lambda x: mlp.predict(x), X_test, y_test, axs[1], "MLP Decision Boundary")

# Show the figure
plt.show()

# Output (the ELM value varies between runs because its random weights are not seeded):
# ELM Accuracy: 0.9666666666666667
# MLP Accuracy: 0.9766666666666667
```

Visual output:

The content above was compiled from online sources. If you found it useful, see you next time.