2024-07-12

한어Русский языкEnglishFrançaisIndonesianSanskrit日本語DeutschPortuguêsΕλληνικάespañolItalianoSuomalainenLatina

On July 3, 2024, Shanghai Artificial Intelligence Laboratory and SenseTime, together with the Chinese University of Hong Kong and Fudan University, officially released the next-generation large language model Scholar Puyu 2.5 (InternLM2.5). Compared with the previous generation model, InternLM2.5 has three outstanding highlights:

The reasoning capability has been greatly improved, leading the open source models of the same level at home and abroad, and even surpassing Llama3-70B, which is ten times more powerful, in some dimensions;

Supports 1M tokens context and can process millions of words of long text;

It has powerful independent planning and tool-calling capabilities. For example, it can search hundreds of web pages and conduct integrated analysis for complex problems.

The InternLM2.5-7B model is now available as open source, and larger and smaller models will be released in the near future. Shanghai Artificial Intelligence Laboratory adheres to the concept of "empowering innovation with continuous high-quality open source". While consistently providing high-quality open source models to the community, it will continue to adhere to free commercial licensing.

GitHub link:GitHub - InternLM/InternLM: Official release of InternLM2.5 7B base and chat models. 1M context support HuggingFace model:https://huggingface.co/internlm

Scholar Pu Yu's homepage:Scholar Pu Yu

With the rapid development of large models, the data accumulated by humans is also being consumed rapidly. How to efficiently improve model performance has become a major challenge we are facing. To this end, we have developed a new synthetic data and model flywheel. On the one hand, synthetic data can make up for the lack of high-quality data in the field. On the other hand, the self-iteration of the model can continuously improve data and fix defects, thereby greatly accelerating the iteration of InternLM2.5.

In response to different data characteristics, we have developed a variety of data synthesis technology solutions to ensure the quality of different types of synthetic data, including rule-based data construction, model-based data expansion, and feedback-based data generation.

During the development process, the model itself is also continuously used for model iteration. Based on the current model, we built a multi-agent for data screening, evaluation and annotation, which greatly improved the data quality and diversity. At the same time, the model is also used to produce and refine new corpus, so that the model can fix problems found during the training process.

Powerful reasoning capability is an important foundation for large models to move towards general artificial intelligence. InternLM2.5 optimizes reasoning capability as the core capability of the model, providing a good foundation for the application of complex scenarios.

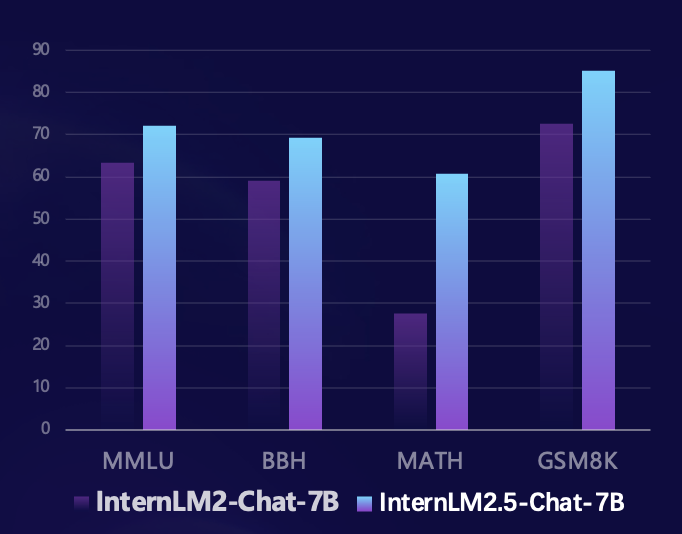

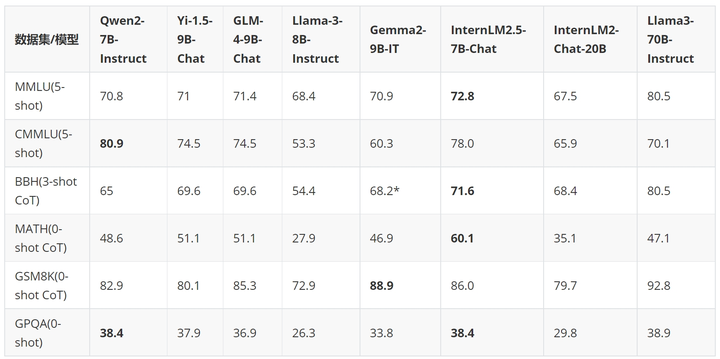

Based on the Sina OpenCompass open source evaluation framework, the research team used a unified and reproducible evaluation method to evaluate on multiple authoritative reasoning ability evaluation sets. Compared with the previous generation model, InternLM2.5 has achieved significant performance improvements on multiple authoritative reasoning ability evaluation sets, especially on the mathematics evaluation set MATH composed of competition problems, which has increased by 100%, and achieved an accuracy of 60% with 7B parameters (reaching the level of GPT-4 Turbo 1106 version), fully demonstrating the model's excellent performance in mathematical reasoning.

In the past few months, the large model open source community has developed rapidly, and a large number of excellent models have emerged. Compared with the latest open source models at home and abroad, InternLM2.5-7B-Chat is ahead of the same-level models in most inference evaluation sets, and has achieved cross-level performance improvement compared to the previous generation model. In some dimensions, it even surpasses Llama3-70B-Instruct, which is ten times larger.

We use an example to demonstrate the excellent reasoning ability of InternLM2.5.

- User:

-

- | 排名 | 国家 / 地区 | 金牌 | 银牌 | 铜牌 | 总计 |

- |------|---------------------|------|------|------|------|

- | 1 | 美国(USA) | 39 | 41 | 33 | 113 |

- | 2 | 中国(CHN) | 38 | 32 | 19 | 89 |

- | 3 | 日本(JPN) | 27 | 14 | 17 | 58 |

- | 4 | 英国(GBR) | 22 | 20 | 22 | 64 |

- | 5 | 俄罗斯奥林匹克委员会(ROC)| 20 | 28 | 23 | 71 |

- | 6 | 澳大利亚(AUS) | 17 | 7 | 22 | 46 |

- | 7 | 荷兰(NED) | 10 | 12 | 14 | 36 |

- | 8 | 法国(FRA) | 10 | 12 | 11 | 33 |

- | 9 | 德国(GER) | 10 | 11 | 16 | 37 |

- | 10 | 意大利(ITA) | 10 | 10 | 20 | 40 |

-

- 根据提供的2020年东京奥运会奖牌榜部分信息,请回答列表中属于北美洲国家和大洋洲国家的银牌奖牌和是多少?

-

- Assistant:

-

- 根据提供的信息,北美洲和大洋洲国家的银牌奖牌数如下:

-

- 北美洲国家:

- - 美国(USA):41枚银牌

- - 加拿大(未在列表中,但作为北美洲国家,其银牌数未提供)

-

- 大洋洲国家:

- - 澳大利亚(AUS):7枚银牌

-

- 因此,北美洲和大洋洲国家的银牌总数为:

- 41(美国)+ 7(澳大利亚)= 48枚银牌。

-

- User:

-

- 根据我提供的信息,现在请你在排名前3的国家中,将金、银、铜牌每一项都至少有15枚的所有国家筛选出来,绘制成表格,请一步步思考来完成这个问题

-

- Assistant:

-

- 好的,让我们一步步来解决这个问题。

-

- 首先,我们需要确定排名前3的国家,根据您提供的信息,这些国家是美国、中国和日本。

-

- 接下来,我们需要筛选出这些国家中,金、银、铜牌每一项都至少有15枚的国家。

-

- 让我们逐一检查这些国家:

-

- 1. 美国(USA):金牌39枚,银牌41枚,铜牌33枚。所有奖牌数都超过了15枚,因此美国符合条件。

-

- 2. 中国(CHN):金牌38枚,银牌32枚,铜牌19枚。所有奖牌数都超过了15枚,因此中国符合条件。

-

- 3. 日本(JPN):金牌27枚,银牌14枚,铜牌17枚。只有金牌数超过了15枚,因此日本不符合条件。

-

- 因此,只有美国和中国符合条件。现在我们可以将这些信息绘制成表格:

-

- | 排名 | 国家 / 地区 | 金牌 | 银牌 | 铜牌 | 总计 |

- |------|---------------------|------|------|------|------|

- | 1 | 美国(USA) | 39 | 41 | 33 | 113 |

- | 2 | 中国(CHN) | 38 | 32 | 19 | 89 |

-

- 请注意,日本(JPN)虽然金牌数超过了15枚,但银牌和铜牌数没有达到要求,因此没有包含在表格中。

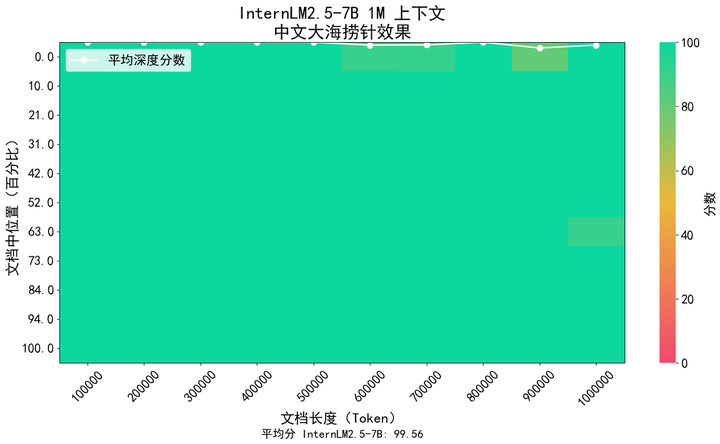

In application scenarios such as long document understanding and complex intelligent agent interactions, the model's context length support has higher requirements. InternLM2.5 proposed a solution, increasing the context length from 200K in the previous generation model InternLM2 to 1M (about 1.2 million Chinese characters), further unleashing the model's potential in ultra-long text applications. In the pre-training of the model, we selected texts with a length of 256K tokens from natural corpus. At the same time, in order to avoid domain shift caused by too single corpus type, we supplemented it with synthetic data, so that the model can retain its capabilities as much as possible while expanding the context.

We used the industry-popular "needle in a haystack" method to evaluate the model's recall of long-text information. The figure below shows that InternLM 2.5 achieved almost perfect needle in a haystack recall within the range of 1M tokens, demonstrating its strong long-text processing capabilities.

In addition, we also used the widely used long-text comprehension ability evaluation set LongBench for evaluation. The results showed that InternLM2.5 achieved the best performance.

| GLM4-9B-Chat-1M | Qwen2-7B-Instruct | Yi1.5-9B-chat | InternLM2.5-7B-Chat-1M | |

| Longbench | 46.5 | 41.2 | 37.2 | 47.4 |

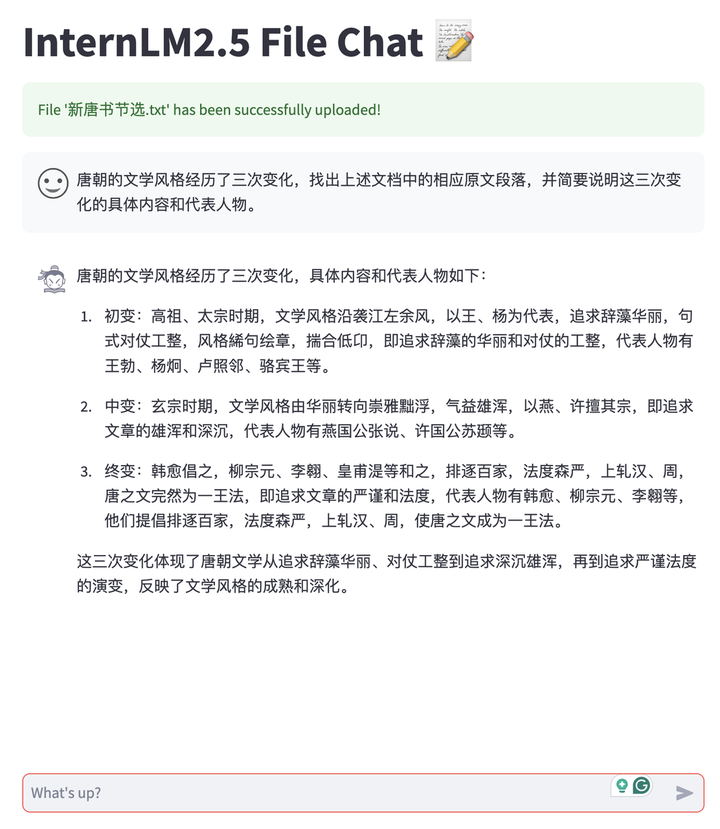

Relying on the long text capabilities of InternLM2.5, we have developed a document dialogue application that supports users to privately deploy models and freely upload documents for dialogue. In addition, the entire system is open source, which is convenient for users to build with one click, including LMDeploy long text reasoning backend support, MinerU multi-type document parsing and conversion capabilities, and Streamlit-based front-end dialogue experience tools. Currently, TXT, Markdown and PDF documents are supported, and various office document types such as Word and PPT will continue to be supported in the future.

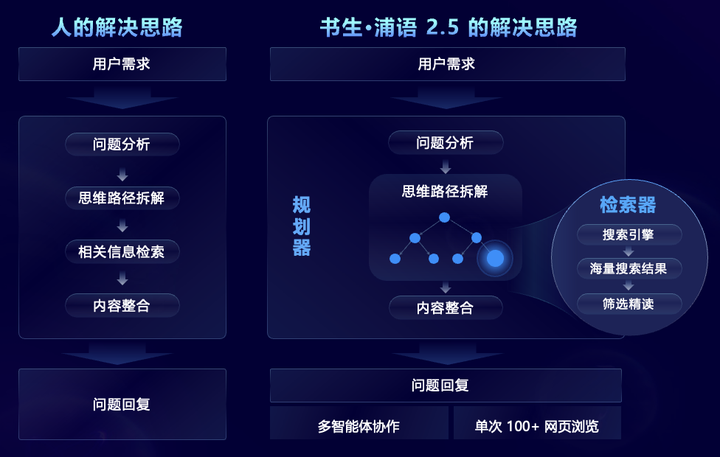

In order to solve complex problem scenarios that require large-scale complex information search and integration, InternLM2.5 innovatively proposed the MindSearch multi-agent framework, which simulates the human thinking process and introduces steps such as task planning, task decomposition, large-scale web search, and multi-source information induction and summary to effectively integrate network information. Among them, the planner focuses on task planning, decomposition, and information induction, and uses graph structure programming to plan and dynamically expand according to the task status. The searcher is responsible for divergent search and summarizing network search results, so that the entire framework can filter, browse, and integrate information based on hundreds of web pages.

With targeted capability enhancement, InternLM2.5 can effectively filter, browse and integrate information from hundreds of web pages, solve complex professional problems, and shorten the research and summary work that humans need to complete in 3 hours to 3 minutes. As shown in the video below, for complex problems with multiple steps, the model can analyze user needs, first search for the technical difficulties of Chang'e 6, then search for corresponding solutions for each technical difficulty, and then compare the Apollo 11 lunar landing plan from four aspects: mission objectives, technical means, scientific achievements, and international cooperation, and finally summarize my country's contribution to the success of lunar exploration.

Scholar Puyu 2.5 is open source, setting a new benchmark for reasoning capabilities

In addition to open source models, Shusheng Pu Yu launched a full-chain open source tool system for large model development and application since July last year, covering six major links: data, pre-training, fine-tuning, deployment, evaluation, and application. These tools enable users to innovate and apply large models more easily, promoting the prosperity and development of the large model open source ecosystem. With the release of InternLM2.5, the full-chain tool system has also been upgraded, expanding the application links and providing new tools for different needs, including:

HuixiangDou Domain Knowledge Assistant (GitHub - InternLM/HuixiangDou: HuixiangDou: Overcoming Group Chat Scenarios with LLM-based Technical Assistance), designed specifically for handling complex technical issues in group chats, suitable for platforms such as WeChat, Feishu, DingTalk, etc., providing complete front-end and back-end web, Android and algorithm source code, and supporting industrial-grade applications.

MinerU intelligent data extraction tool (GitHub - opendatalab/MinerU: MinerU is a one-stop, open-source, high-quality data extraction tool,supports PDF/webpage/e-book extraction.), designed for multimodal document analysis, can not only accurately convert multimodal PDF documents containing mixed images, tables, formulas, etc. into clear and easy-to-analyze Markdown format, but also quickly parse and extract formal content from web pages containing various interference information such as advertisements

In addition to the self-developed full-chain open source tool system, InternLM2.5 actively embraces the community, is compatible with a wide range of community ecological projects, and covers all mainstream open source projects.

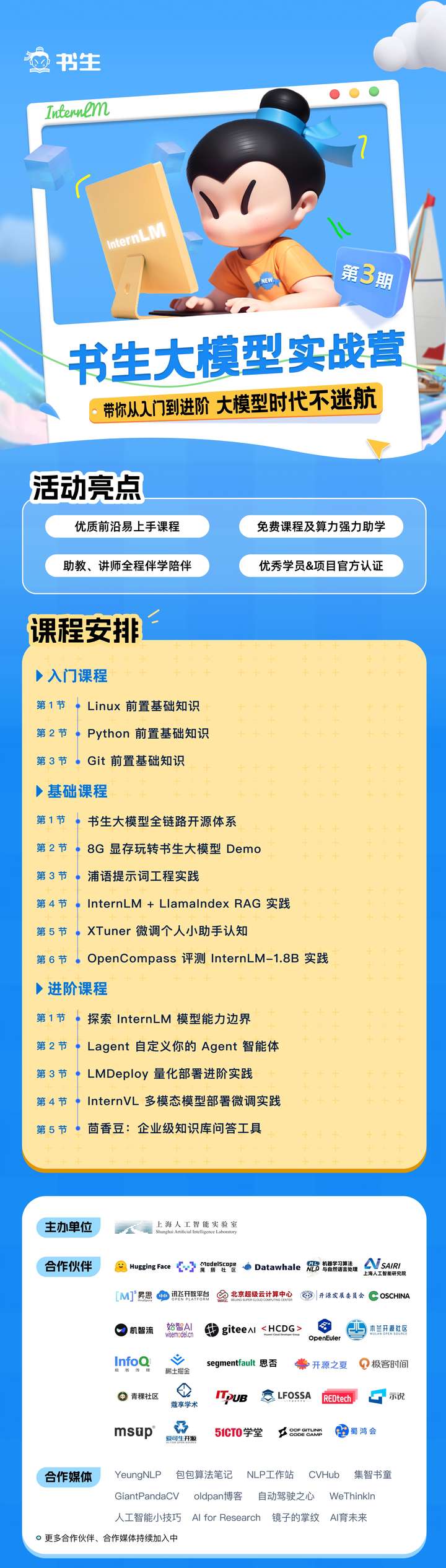

In December last year, Shanghai Artificial Intelligence Laboratory launched the Shusheng·Puyu Big Model Practice Camp, which received unanimous praise from the community. In the past six months, a total of 150,000 people have participated in the learning, and more than 600 ecological projects have been incubated. With the release of InternLM2.5, we also officially announced that the Shusheng·Puyu Big Model Practice Camp has been officially upgraded to the Shusheng Big Model Practice Camp, and more Shusheng Big Model system courses and practices will be gradually added to take you from entry to advanced, so that you will not get lost in the era of big models.

The third Scholar Model Practice Camp will be officially launched from July 10 to August 10. In the practice camp, you will be guided to fine-tune and deploy the InternLM2.5 model. Free computing power and teaching assistants will accompany you throughout the process, as well as authoritative official certificates. Come and join us!Sign upLet’s learn!

Registration link:https://www.wjx.cn/vm/PvefmG2.aspx?udsid=831608

Shusheng Pu Yu empowers innovation with continuous high-quality open source, adheres to open source and free commercial use, and provides better models and tool chains for practical application scenarios.