2024-07-12

Author: SelectDB Technical Team

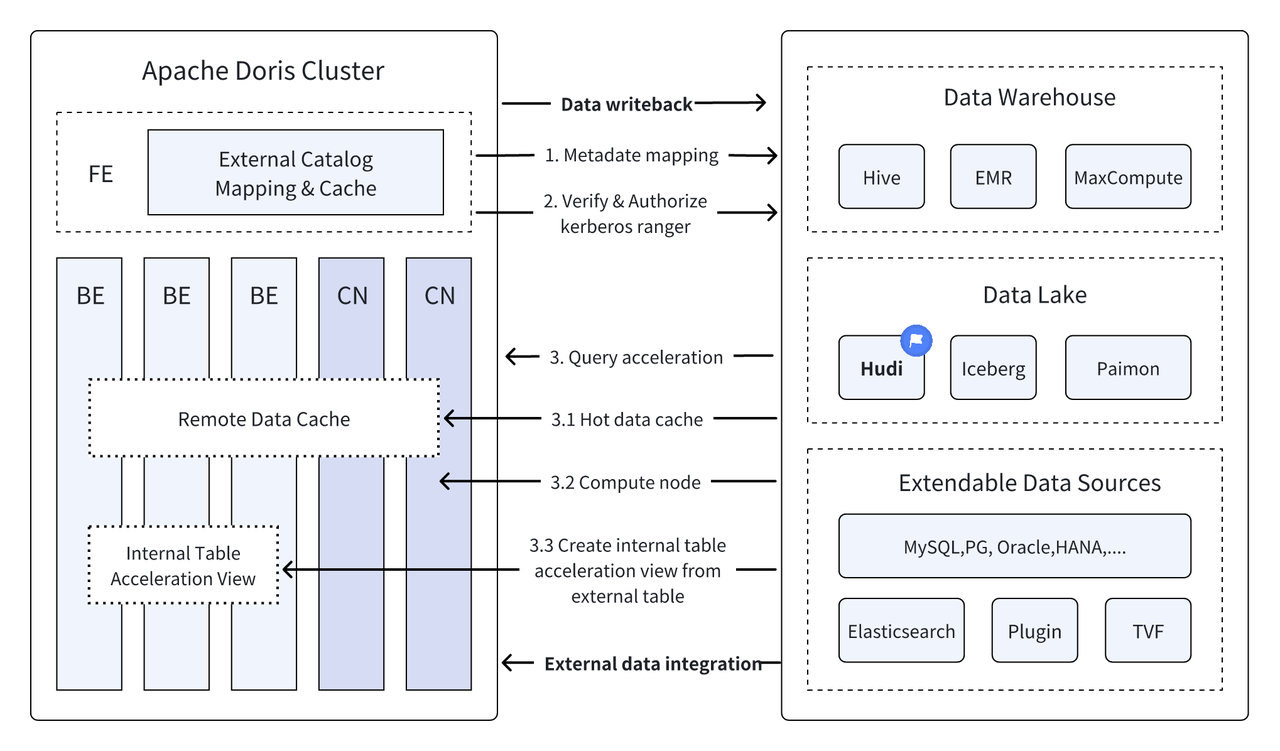

Introduction: The Data Lakehouse combines the high performance and real-time capabilities of a data warehouse with the low cost and flexibility of a data lake, helping users meet diverse data processing and analysis needs more conveniently. Over the past several releases, Apache Doris has deepened its integration with data lakes and has evolved into a mature lakehouse solution. To help users get started quickly, we will introduce the lakehouse architecture built with Apache Doris and various mainstream data lake formats and storage systems through a series of articles, covering Hudi, Iceberg, Paimon, OSS, Delta Lake, Kudu, BigQuery, etc.

As a new open data management architecture, the Data Lakehouse combines the high performance and real-time capabilities of data warehouses with the low cost and flexibility of data lakes, helping users meet diverse data needs more conveniently. It is increasingly being adopted in enterprise big data systems.

Over the past several releases, Apache Doris has continued to deepen its integration with data lakes and has now evolved into a mature lakehouse solution.

Apache Hudi is currently one of the most mainstream open data lake formats and a transactional data lake management platform. It supports a variety of mainstream query engines, including Apache Doris. Apache Doris has also enhanced its ability to read Apache Hudi tables:

- Copy on Write Table: Snapshot Query

- Merge on Read Table: Snapshot Queries, Read Optimized Queries

- Support for Time Travel

- Support for Incremental Read

With the high-performance query execution of Apache Doris and the real-time data management capabilities of Apache Hudi, efficient, flexible, and low-cost data querying and analysis can be achieved. The combination of Apache Doris and Apache Hudi has already been verified and adopted in real business scenarios by multiple community users:

Real-time analysis and processing: Common scenarios such as transaction analysis in the financial industry, real-time clickstream analysis in the advertising industry, and user behavior analysis in the e-commerce industry all require real-time data updates and query analysis. Hudi enables real-time updating and management of data while ensuring data consistency.

Data backtracking and auditing: For industries such as finance and healthcare, which have extremely high requirements for data security and accuracy, data backtracking and auditing are very important. Hudi provides a Time Travel feature that lets users view the state of historical data.

Incremental data reading and analysis: Large-scale data analysis often has to deal with large data volumes and frequent updates. The Incremental Read feature of Apache Doris can make this process more efficient, significantly improving the efficiency of data processing and analysis.

Federated queries across data sources: Many enterprises have complex data sources, and data may be stored in different databases. The Multi-Catalog feature of Doris supports automatic mapping and synchronization of multiple data sources, as well as federated queries across them. For enterprises that need to pull and integrate data from multiple sources for analysis, this greatly shortens the data flow path and improves work efficiency.

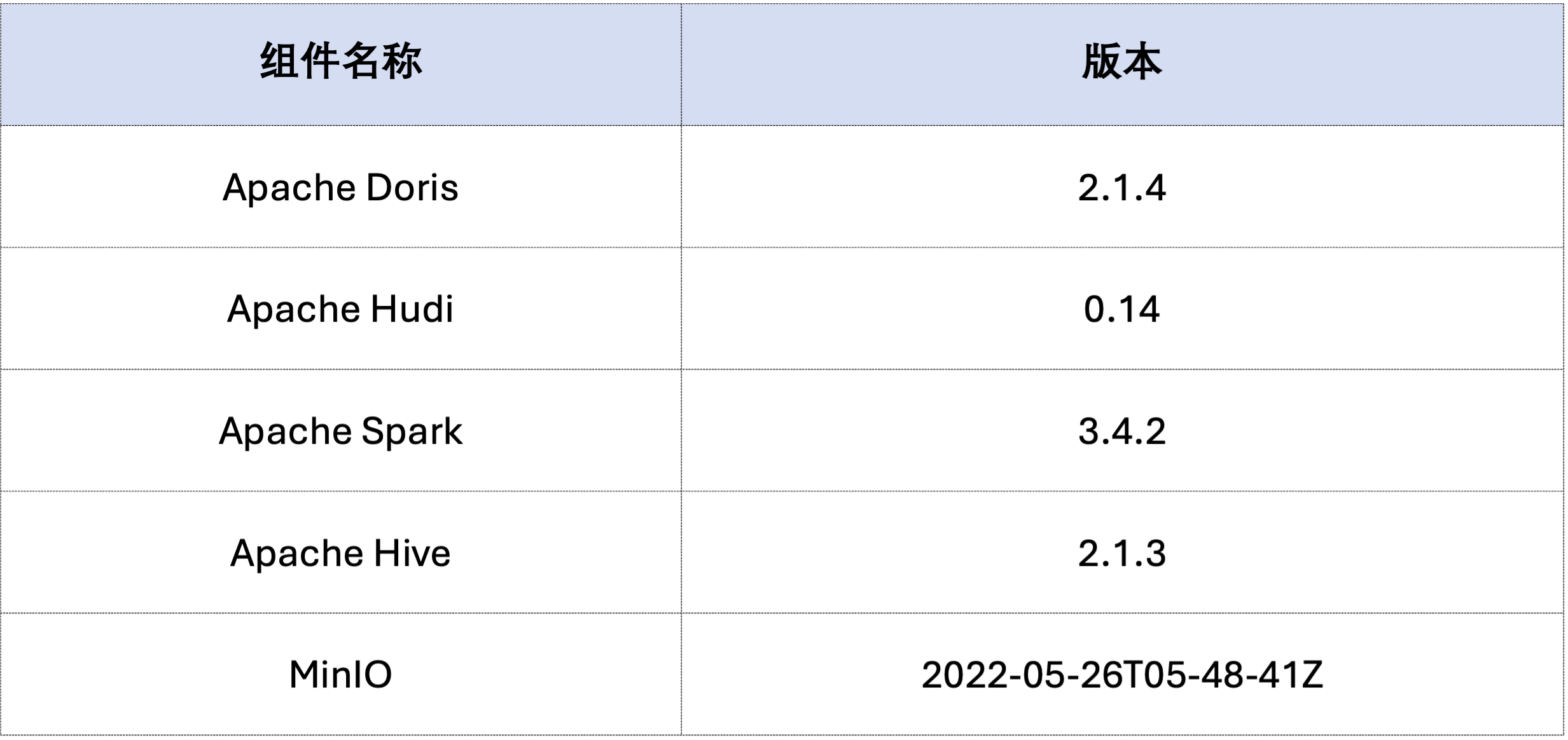

This article shows readers how to quickly set up a test and demo environment for Apache Doris + Apache Hudi in Docker, and demonstrates each feature so that readers can get started quickly.

All scripts and code used in this article are available at: https://github.com/apache/doris/tree/master/samples/datalake/hudi

The example in this article is deployed with Docker Compose.

sudo docker network create -d bridge hudi-net

sudo ./start-hudi-compose.sh

sudo ./login-spark.sh

sudo ./login-doris.sh

Next, generate Hudi data through Spark. As shown in the code below, the cluster already contains a Hive table named customer, and you can create Hudi tables from this Hive table:

-- ./login-spark.sh

spark-sql> use default;

-- create a COW table

spark-sql> CREATE TABLE customer_cow

USING hudi

TBLPROPERTIES (

type = 'cow',

primaryKey = 'c_custkey',

preCombineField = 'c_name'

)

PARTITIONED BY (c_nationkey)

AS SELECT * FROM customer;

-- create a MOR table

spark-sql> CREATE TABLE customer_mor

USING hudi

TBLPROPERTIES (

type = 'mor',

primaryKey = 'c_custkey',

preCombineField = 'c_name'

)

PARTITIONED BY (c_nationkey)

AS SELECT * FROM customer;

As shown below, a Catalog named hive has already been created in the Doris cluster (you can view it with SHOW CATALOGS). The following is the statement that was used to create this Catalog:

-- Already created; no need to run this again

CREATE CATALOG `hive` PROPERTIES (

"type"="hms",

'hive.metastore.uris' = 'thrift://hive-metastore:9083',

"s3.access_key" = "minio",

"s3.secret_key" = "minio123",

"s3.endpoint" = "http://minio:9000",

"s3.region" = "us-east-1",

"use_path_style" = "true"

);

-- ./login-doris.sh

doris> REFRESH CATALOG hive;

spark-sql> insert into customer_cow values (100, "Customer#000000100", "jD2xZzi", "25-430-914-2194", 3471.59, "BUILDING", "cial ideas. final, furious requests", 25);

spark-sql> insert into customer_mor values (100, "Customer#000000100", "jD2xZzi", "25-430-914-2194", 3471.59, "BUILDING", "cial ideas. final, furious requests", 25);

doris> use hive.default;

doris> select * from customer_cow where c_custkey = 100;

doris> select * from customer_mor where c_custkey = 100;

Insert a row with c_custkey=32. This key already exists, so the existing row is overwritten:

spark-sql> insert into customer_cow values (32, "Customer#000000032_update", "jD2xZzi", "25-430-914-2194", 3471.59, "BUILDING", "cial ideas. final, furious requests", 15);

spark-sql> insert into customer_mor values (32, "Customer#000000032_update", "jD2xZzi", "25-430-914-2194", 3471.59, "BUILDING", "cial ideas. final, furious requests", 15);

doris> select * from customer_cow where c_custkey = 32;

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| c_custkey | c_name | c_address | c_phone | c_acctbal | c_mktsegment | c_comment | c_nationkey |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| 32 | Customer#000000032_update | jD2xZzi | 25-430-914-2194 | 3471.59 | BUILDING | cial ideas. final, furious requests | 15 |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

doris> select * from customer_mor where c_custkey = 32;

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| c_custkey | c_name | c_address | c_phone | c_acctbal | c_mktsegment | c_comment | c_nationkey |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| 32 | Customer#000000032_update | jD2xZzi | 25-430-914-2194 | 3471.59 | BUILDING | cial ideas. final, furious requests | 15 |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+
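The overwrite behaviour seen above can be pictured with a small Python model. This is only a sketch under stated assumptions: the function name `upsert` and the dictionary layout are hypothetical, an incoming row simply replaces the stored row with the same `c_custkey`, and the role of `preCombineField` and partitioning is deliberately ignored.

```python
# Simplified model of an upsert keyed on primaryKey (c_custkey).
# Assumption: an incoming row with an existing key replaces the stored row;
# preCombineField ordering and partition handling are ignored on purpose.

def upsert(table: dict, rows: list) -> dict:
    """Return a new table with each incoming row stored under its c_custkey."""
    merged = dict(table)
    for row in rows:
        merged[row["c_custkey"]] = row
    return merged


table = {32: {"c_custkey": 32, "c_name": "Customer#000000032"}}
# A new key is added, an existing key is overwritten.
table = upsert(table, [{"c_custkey": 100, "c_name": "Customer#000000100"}])
table = upsert(table, [{"c_custkey": 32, "c_name": "Customer#000000032_update"}])
print(sorted(table))
print(table[32]["c_name"])
```

The model keeps exactly one row per key, which is why the queries above return a single row for c_custkey = 32.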

Incremental Read is one of the features provided by Hudi. Doris can use it to query the data that changed after the row with c_custkey=100 was inserted. As shown below, the change is the row with c_custkey=32 that we wrote afterwards:

doris> select * from customer_cow@incr('beginTime'='20240603015018572');

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| c_custkey | c_name | c_address | c_phone | c_acctbal | c_mktsegment | c_comment | c_nationkey |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| 32 | Customer#000000032_update | jD2xZzi | 25-430-914-2194 | 3471.59 | BUILDING | cial ideas. final, furious requests | 15 |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

spark-sql> select * from hudi_table_changes('customer_cow', 'latest_state', '20240603015018572');

doris> select * from customer_mor@incr('beginTime'='20240603015058442');

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| c_custkey | c_name | c_address | c_phone | c_acctbal | c_mktsegment | c_comment | c_nationkey |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

| 32 | Customer#000000032_update | jD2xZzi | 25-430-914-2194 | 3471.59 | BUILDING | cial ideas. final, furious requests | 15 |

+-----------+---------------------------+-----------+-----------------+-----------+--------------+-------------------------------------+-------------+

spark-sql> select * from hudi_table_changes('customer_mor', 'latest_state', '20240603015058442');
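The semantics of the incremental queries above can be sketched in Python. This is an illustrative model, not Doris or Hudi code: the function `incremental_read` and the `COMMITS` structure are hypothetical, the instants come from this article, and the row payloads are reduced to a customer name. The model assumes that `beginTime` is exclusive, which matches the results shown above.

```python
# Toy commit timeline for customer_cow. Instants are taken from the article;
# the payloads (key -> c_name) are illustrative stand-ins for full rows.
COMMITS = [
    ("20240603013858098", {32: "Customer#000000032"}),         # initial load (one row shown)
    ("20240603015018572", {100: "Customer#000000100"}),        # insert c_custkey=100
    ("20240603015444737", {32: "Customer#000000032_update"}),  # update c_custkey=32
]


def incremental_read(commits, begin_time):
    """Latest state of every key written by commits strictly after begin_time."""
    changed = {}
    for instant, rows in sorted(commits):
        # Instants are fixed-width digit strings, so string comparison
        # orders them chronologically.
        if instant > begin_time:
            changed.update(rows)
    return changed


print(incremental_read(COMMITS, "20240603015018572"))
```

With the begin time set to the commit that inserted c_custkey=100, only the later update to c_custkey=32 is returned.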

Doris supports querying a specified snapshot version of Hudi data, which implements the Time Travel feature. First, you can query the commit history of the two Hudi tables through Spark:

spark-sql> call show_commits(table => 'customer_cow', limit => 10);

20240603033556094 20240603033558249 commit 448833 0 1 1 183 0 0

20240603015444737 20240603015446588 commit 450238 0 1 1 202 1 0

20240603015018572 20240603015020503 commit 436692 1 0 1 1 0 0

20240603013858098 20240603013907467 commit 44902033 100 0 25 18751 0 0

spark-sql> call show_commits(table => 'customer_mor', limit => 10);

20240603033745977 20240603033748021 deltacommit 1240 0 1 1 0 0 0

20240603015451860 20240603015453539 deltacommit 1434 0 1 1 1 1 0

20240603015058442 20240603015100120 deltacommit 436691 1 0 1 1 0 0

20240603013918515 20240603013922961 deltacommit 44904040 100 0 25 18751 0 0
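The first column of each row above is a Hudi commit instant, a 17-digit string in the form yyyyMMddHHmmssSSS. A minimal Python sketch for turning such a string into a `datetime` is shown below; the helper name `parse_instant` is hypothetical, and no time zone is assumed because the listing does not state one.

```python
from datetime import datetime, timedelta


def parse_instant(instant: str) -> datetime:
    """Parse a 17-digit instant (yyyyMMddHHmmssSSS) into a naive datetime."""
    if len(instant) != 17 or not instant.isdigit():
        raise ValueError(f"unexpected instant format: {instant!r}")
    # The first 14 digits carry the date and time down to seconds.
    seconds = datetime.strptime(instant[:14], "%Y%m%d%H%M%S")
    # The last 3 digits are milliseconds.
    return seconds + timedelta(milliseconds=int(instant[14:]))


print(parse_instant("20240603015018572").isoformat())
```

Parsed instants make it easier to pick the value to pass to an incremental or Time Travel query.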

Then, through Doris, you can query the snapshot taken before the c_custkey=32 update was written. As you can see below, the c_custkey=32 row has not yet been updated:

Note: The Time Travel syntax does not yet work with the new optimizer, so you first need to turn the new optimizer off. This issue will be fixed in subsequent versions.

doris> set enable_nereids_planner=false;

doris> select * from customer_cow for time as of '20240603015018572' where c_custkey = 32 or c_custkey = 100;

+-----------+--------------------+---------------------------------------+-----------------+-----------+--------------+--------------------------------------------------+-------------+

| c_custkey | c_name | c_address | c_phone | c_acctbal | c_mktsegment | c_comment | c_nationkey |

+-----------+--------------------+---------------------------------------+-----------------+-----------+--------------+--------------------------------------------------+-------------+

| 32 | Customer#000000032 | jD2xZzi UmId,DCtNBLXKj9q0Tlp2iQ6ZcO3J | 25-430-914-2194 | 3471.53 | BUILDING | cial ideas. final, furious requests across the e | 15 |

| 100 | Customer#000000100 | jD2xZzi | 25-430-914-2194 | 3471.59 | BUILDING | cial ideas. final, furious requests | 25 |

+-----------+--------------------+---------------------------------------+-----------------+-----------+--------------+--------------------------------------------------+-------------+

-- compare with spark-sql

spark-sql> select * from customer_cow timestamp as of '20240603015018572' where c_custkey = 32 or c_custkey = 100;

doris> select * from customer_mor for time as of '20240603015058442' where c_custkey = 32 or c_custkey = 100;

+-----------+--------------------+---------------------------------------+-----------------+-----------+--------------+--------------------------------------------------+-------------+

| c_custkey | c_name | c_address | c_phone | c_acctbal | c_mktsegment | c_comment | c_nationkey |

+-----------+--------------------+---------------------------------------+-----------------+-----------+--------------+--------------------------------------------------+-------------+

| 100 | Customer#000000100 | jD2xZzi | 25-430-914-2194 | 3471.59 | BUILDING | cial ideas. final, furious requests | 25 |

| 32 | Customer#000000032 | jD2xZzi UmId,DCtNBLXKj9q0Tlp2iQ6ZcO3J | 25-430-914-2194 | 3471.53 | BUILDING | cial ideas. final, furious requests across the e | 15 |

+-----------+--------------------+---------------------------------------+-----------------+-----------+--------------+--------------------------------------------------+-------------+

spark-sql> select * from customer_mor timestamp as of '20240603015058442' where c_custkey = 32 or c_custkey = 100;
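The Time Travel results above can be modelled as replaying the commit timeline up to and including the requested instant. The Python sketch below is a toy model, not how Doris or Hudi implement it: `snapshot_as_of` and `COMMITS` are hypothetical names, the instants are the customer_cow commits from this article, and the rows are reduced to a customer name.

```python
# Toy commit timeline for customer_cow (instants from the article,
# payloads reduced to key -> c_name for illustration).
COMMITS = [
    ("20240603013858098", {32: "Customer#000000032"}),         # initial load (one row shown)
    ("20240603015018572", {100: "Customer#000000100"}),        # insert c_custkey=100
    ("20240603015444737", {32: "Customer#000000032_update"}),  # update c_custkey=32
]


def snapshot_as_of(commits, instant):
    """Table state after applying every commit whose instant is <= the given one."""
    state = {}
    for commit_time, rows in sorted(commits):
        if commit_time <= instant:  # the requested commit itself is included
            state.update(rows)
    return state


print(snapshot_as_of(COMMITS, "20240603015018572"))
```

At instant 20240603015018572 the model contains the newly inserted key 100 and the original, not yet updated, row for key 32, which mirrors the query result shown above.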

Data in Apache Hudi can be roughly divided into two categories: baseline data and incremental data. Baseline data is usually a set of merged Parquet files, while incremental data refers to the data increments produced by INSERT, UPDATE, or DELETE operations. Baseline data can be read directly, while incremental data must be read through Merge on Read.

For queries on Hudi COW tables, or Read Optimized queries on MOR tables, the data is baseline data, so the data files can be read directly by the native Parquet Reader in Doris, which gives very fast query responses. For incremental data, Doris has to call the Hudi Java SDK through JNI to access it. To achieve the best query performance, Apache Doris divides the data in a query into a baseline part and an incremental part, and reads each part with the corresponding method described above.
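The split between the two read paths can be sketched as follows. This is a simplified Python model under stated assumptions, not the actual Doris planner: the `FileSlice` class, the `plan_reads` function, and the file names are hypothetical, and a slice is treated as incremental simply when it has at least one log file.

```python
from dataclasses import dataclass, field


@dataclass
class FileSlice:
    """One unit of scanning: a base Parquet file plus any log files on top of it."""
    base_file: str
    log_files: list = field(default_factory=list)


def plan_reads(slices):
    """Split slices into those readable natively and those needing the JNI path."""
    native = [s for s in slices if not s.log_files]  # baseline data only
    jni = [s for s in slices if s.log_files]         # needs Merge on Read
    return native, jni


# 101 slices, exactly one of which carries a log file (illustrative names).
slices = [FileSlice(f"base-{i}.parquet") for i in range(100)]
slices.append(FileSlice("base-100.parquet", ["delta-1.log"]))

native, jni = plan_reads(slices)
print(f"hudiNativeReadSplits={len(native)}/{len(slices)}")
```

In this model the fewer slices carry log files, the larger the share of data that takes the fast native path.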

To verify this optimization, we can use the EXPLAIN statement to see how much baseline data and incremental data the queries below involve. For the COW table, all 101 data splits are baseline data (hudiNativeReadSplits=101/101), so the whole COW table can be read directly by the Doris Parquet Reader, which gives the best query performance. For the MOR table, most data splits are baseline data (hudiNativeReadSplits=100/101) and the remaining split is incremental data, so good query performance can generally still be achieved.

-- COW table is read natively

doris> explain select * from customer_cow where c_custkey = 32;

| 0:VHUDI_SCAN_NODE(68) |

| table: customer_cow |

| predicates: (c_custkey[#5] = 32) |

| inputSplitNum=101, totalFileSize=45338886, scanRanges=101 |

| partition=26/26 |

| cardinality=1, numNodes=1 |

| pushdown agg=NONE |

| hudiNativeReadSplits=101/101 |

-- MOR table: only the split that holds the updated row `c_custkey = 32` has a log file, so 100 splits are read natively and the one split with a log file is read through JNI.

doris> explain select * from customer_mor where c_custkey = 32;

| 0:VHUDI_SCAN_NODE(68) |

| table: customer_mor |

| predicates: (c_custkey[#5] = 32) |

| inputSplitNum=101, totalFileSize=45340731, scanRanges=101 |

| partition=26/26 |

| cardinality=1, numNodes=1 |

| pushdown agg=NONE |

| hudiNativeReadSplits=100/101 |
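When comparing many EXPLAIN outputs, it can help to extract the native-read ratio programmatically. The following Python sketch assumes the plan text contains a field in exactly the form shown above, `hudiNativeReadSplits=<native>/<total>`; the helper name `native_read_ratio` is hypothetical.

```python
import re


def native_read_ratio(explain_text: str):
    """Return (native, total) parsed from hudiNativeReadSplits, or None if absent."""
    match = re.search(r"hudiNativeReadSplits=(\d+)/(\d+)", explain_text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


plan = """
| inputSplitNum=101, totalFileSize=45340731, scanRanges=101 |
| hudiNativeReadSplits=100/101 |
"""
native, total = native_read_ratio(plan)
print(f"{total - native} split(s) read through JNI")
```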

You can also perform some delete operations through Spark to further observe how the Hudi baseline data and incremental data change:

-- Use delete statement to see more differences

spark-sql> delete from customer_cow where c_custkey = 64;

doris> explain select * from customer_cow where c_custkey = 64;

spark-sql> delete from customer_mor where c_custkey = 64;

doris> explain select * from customer_mor where c_custkey = 64;

In addition, partition pruning can be applied through partition conditions to further reduce the amount of data scanned and improve query speed. In the following example, the partition condition c_nationkey = 15 prunes the partitions so that the query only needs to access the data in one partition (partition=1/26).

-- customer_xxx is partitioned by c_nationkey, we can use the partition column to prune data

doris> explain select * from customer_mor where c_custkey = 64 and c_nationkey = 15;

| 0:VHUDI_SCAN_NODE(68) |

| table: customer_mor |

| predicates: (c_custkey[#5] = 64), (c_nationkey[#12] = 15) |

| inputSplitNum=4, totalFileSize=1798186, scanRanges=4 |

| partition=1/26 |

| cardinality=1, numNodes=1 |

| pushdown agg=NONE |

| hudiNativeReadSplits=3/4 |
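The effect of the partition condition can be illustrated with a few lines of Python. This is only a conceptual sketch: `prune_partitions` is a hypothetical helper, and the 26 partitions are assumed to correspond to c_nationkey values 0 through 25.

```python
def prune_partitions(partitions, column, value):
    """Keep only the partitions whose partition-column value matches the predicate."""
    return [p for p in partitions if p[column] == value]


# Assumed layout: one partition per c_nationkey value, 26 in total.
partitions = [{"c_nationkey": key} for key in range(26)]
selected = prune_partitions(partitions, "c_nationkey", 15)
print(f"partition={len(selected)}/{len(partitions)}")
```

Only the files inside the selected partition then have to be considered for splitting and scanning.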

The above is a detailed guide to quickly building a test/demo environment based on Apache Doris and Apache Hudi. In the future, we will also publish a series of guides on building a lakehouse architecture with Apache Doris and various mainstream data lake formats and storage systems, including Iceberg, Paimon, OSS, Delta Lake, etc. Stay tuned.