2024-07-12


Paper address: https://arxiv.org/pdf/2403.10506

Humanoid robots have a human-like form and are expected to assist humans across a wide range of environments and tasks. However, expensive and fragile hardware makes this research difficult. This study therefore developed HumanoidBench, a benchmark built on advanced simulation technology. It uses a humanoid robot to evaluate different algorithms on a variety of tasks, from dexterous hand manipulation to complex whole-body manipulation. The results show that state-of-the-art reinforcement learning algorithms struggle with many of these tasks. HumanoidBench is an important tool for the robotics community to address the challenges of humanoid robots, providing a platform for rapidly validating algorithms and ideas.

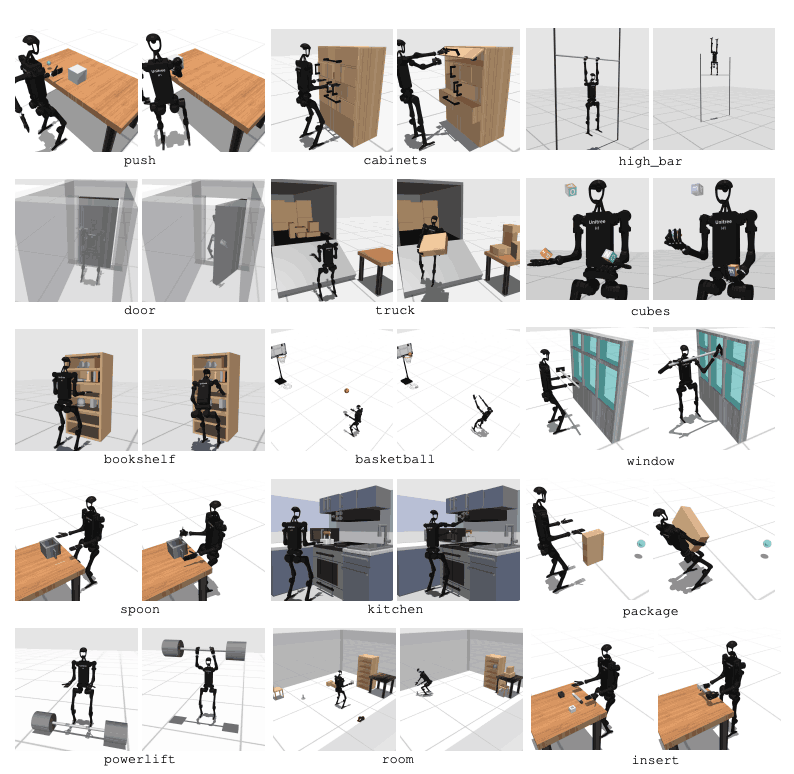

Humanoid robots are expected to integrate seamlessly into our daily lives. However, their controllers are typically designed by hand for specific tasks, and each new task requires significant engineering effort. To address this, the authors developed a benchmark called HumanoidBench to facilitate learning for humanoid robots. It covers a range of challenges, including complex control, whole-body coordination, and long-horizon tasks. The platform provides a safe and inexpensive environment for testing robot learning algorithms, with a wide range of tasks resembling everyday human activities. HumanoidBench can easily incorporate a variety of humanoid robots and end-effectors, and it includes 15 whole-body manipulation tasks and 12 locomotion tasks. These tasks test whether state-of-the-art RL algorithms can control the complex dynamics of humanoid robots and point to directions for future research.

Deep reinforcement learning (RL) has advanced rapidly with the advent of standardized simulation benchmarks. However, existing robotic manipulation environments focus mainly on static, short-horizon skills and do not involve complex manipulation. In contrast, the proposed benchmark covers a variety of long-horizon manipulation tasks. Moreover, most existing benchmarks are designed for specific tasks, and many use simplified models. There is a need for a comprehensive benchmark based on models of real hardware.

The main robotic agent is a Unitree H1 humanoid robot equipped with two dexterous Shadow Hands, simulated in MuJoCo. The simulation environment supports a range of observations, including robot state, object state, visual observations, and whole-body tactile sensing. The robot is controlled via position control.
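Position control means the policy outputs target joint positions rather than torques, and a low-level controller turns those targets into forces. The sketch below is a generic illustration under stated assumptions, not HumanoidBench's code: the normalized [-1, 1] action range, the helper names `scale_action` and `pd_torque`, and the gains are all hypothetical. In MuJoCo, position actuators apply a comparable proportional law internally.

```python
from typing import List, Sequence


def scale_action(action: Sequence[float], low: Sequence[float],
                 high: Sequence[float]) -> List[float]:
    """Map normalized actions in [-1, 1] to joint-position targets in [low, high]."""
    targets = []
    for a, lo, hi in zip(action, low, high):
        a = max(-1.0, min(1.0, a))  # clip out-of-range policy outputs
        targets.append(lo + (a + 1.0) * 0.5 * (hi - lo))
    return targets


def pd_torque(q_target: Sequence[float], q: Sequence[float],
              qdot: Sequence[float], kp: float, kd: float) -> List[float]:
    """Per-joint PD law: tau = kp * (q_target - q) - kd * qdot."""
    return [kp * (qt - qi) - kd * vi for qt, qi, vi in zip(q_target, q, qdot)]


# Hypothetical two-joint example; gains are illustrative, not tuned for H1.
targets = scale_action([0.0, 1.0], low=[-1.0, 0.0], high=[1.0, 2.0])
torques = pd_torque(targets, q=[0.5, 2.0], qdot=[0.0, 1.0], kp=10.0, kd=1.0)
print(targets, torques)  # [0.0, 2.0] [-5.0, -1.0]
```

For example, with joint ranges `[-1, 1]` and `[0, 2]`, the normalized action `[0.0, 1.0]` maps to targets `[0.0, 2.0]`; the PD law then pushes each joint toward its target while damping its velocity.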

To perform tasks similar to humans, robots must be able to understand their environment and take appropriate actions. However, testing robots in the real world is difficult due to cost and safety considerations. Therefore, simulated environments are an important tool for learning and controlling robots.

HumanoidBench includes 27 tasks with a high-dimensional action space (up to 61 actuators). Locomotion tasks include basic movements such as walking and running. Manipulation tasks include more advanced tasks such as pushing, pulling, lifting, and grasping objects.

The purpose of the benchmark is to evaluate how well modern algorithms can accomplish these tasks. The robot observes the state of the environment and chooses an action based on it. Guided by a reward function, it learns a policy that performs the task well.
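The observe, act, and reward cycle described above can be sketched as a simple episode loop. `ToyReachEnv` is a hypothetical one-dimensional stand-in, not a HumanoidBench task, and its `reset`/`step` methods only loosely mirror Gym-style interfaces (here `step` returns a simplified 3-tuple). An RL algorithm's job is to improve the policy so that the episode return grows; the hand-written `greedy_policy` only shows that a better policy earns a higher return.

```python
class ToyReachEnv:
    """Hypothetical 1-D task (not part of HumanoidBench).

    Observation: position x. Action: velocity command in [-1, 1].
    Reward: negative distance to the goal, so closer is better.
    """

    def __init__(self, goal: float = 1.0, max_steps: int = 50):
        self.goal = goal
        self.max_steps = max_steps

    def reset(self) -> float:
        self.x, self.t = 0.0, 0
        return self.x

    def step(self, action: float):
        action = max(-1.0, min(1.0, action))  # respect the action bounds
        self.x += 0.1 * action                # simple kinematic update
        self.t += 1
        reward = -abs(self.x - self.goal)
        done = self.t >= self.max_steps
        return self.x, reward, done


def run_episode(env: ToyReachEnv, policy) -> float:
    """Observe -> act -> receive reward until the episode ends; return the sum."""
    obs, total, done = env.reset(), 0.0, False
    while not done:
        obs, reward, done = env.step(policy(obs))
        total += reward
    return total


def greedy_policy(obs: float, goal: float = 1.0) -> float:
    """Hand-written stand-in for a learned policy: move toward the goal."""
    return 1.0 if obs < goal else -1.0


print(run_episode(ToyReachEnv(), lambda obs: 0.0))        # -50.0
print(run_episode(ToyReachEnv(), greedy_policy) > -50.0)  # True
```

A policy that never moves collects a return of -50.0 over 50 steps, while `greedy_policy` earns more because it approaches the goal.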

For example, in walking tasks, the robot needs to maintain forward speed without falling. In such tasks, optimizing balance and gait is very important. On the other hand, in manipulation tasks, the robot needs to manipulate objects precisely. This requires understanding the position and orientation of the object and appropriate force control.
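Rewards for such tasks are often built from smooth "tolerance" terms that equal 1 inside a target range and decay outside it, in the style of the DeepMind Control Suite. The sketch below is hypothetical: the linear decay, the thresholds (including an assumed standing height of 1.65 m), and the function names are illustrative assumptions rather than HumanoidBench's actual reward definitions. Multiplying the terms means the walking reward is high only when the robot is standing, upright, and moving at the target speed at the same time.

```python
import math
from typing import Sequence


def tolerance(x: float, lower: float, upper: float, margin: float) -> float:
    """1.0 inside [lower, upper]; decays linearly to 0.0 at `margin` outside it."""
    if lower <= x <= upper:
        return 1.0
    if margin <= 0:
        return 0.0
    distance = lower - x if x < lower else x - upper
    return max(0.0, 1.0 - distance / margin)


def walk_reward(head_height: float, torso_upright: float, forward_vel: float,
                stand_height: float = 1.65, target_speed: float = 1.0) -> float:
    """Hypothetical walking reward: stay tall, stay upright, keep moving forward.

    torso_upright is assumed to be the vertical component of the torso's z-axis
    (1.0 when perfectly upright).
    """
    standing = tolerance(head_height, stand_height, math.inf, stand_height / 4)
    upright = tolerance(torso_upright, 0.9, math.inf, 1.9)
    moving = tolerance(forward_vel, target_speed, math.inf, target_speed)
    return standing * upright * moving


def reach_reward(hand_pos: Sequence[float], obj_pos: Sequence[float],
                 margin: float = 0.5) -> float:
    """Hypothetical manipulation term: 1.0 when the hand is within 2 cm of the object."""
    return tolerance(math.dist(hand_pos, obj_pos), 0.0, 0.02, margin)


print(walk_reward(1.65, 1.0, 1.0))  # 1.0: tall, upright, at target speed
print(walk_reward(0.3, 1.0, 1.0))   # 0.0: fallen, so the product collapses
```

Because the terms are multiplied, a fallen robot (head height 0.3 m) receives 0.0 even if its forward velocity matches the target, which reflects the point above that balance matters as much as speed.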

The goal of HumanoidBench is to promote advances in the field of robot learning and control through these tasks. Using a simulated environment, researchers can safely conduct experiments and evaluate the performance of robots in many different scenarios. This will help develop better control algorithms and learning methods, thereby promoting the future application of humanoid robots in the real world.

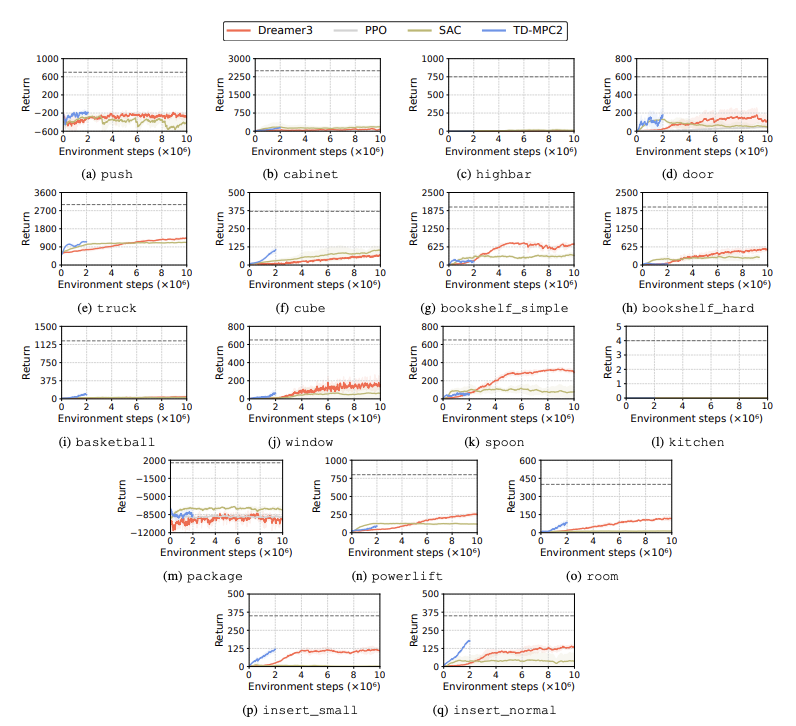

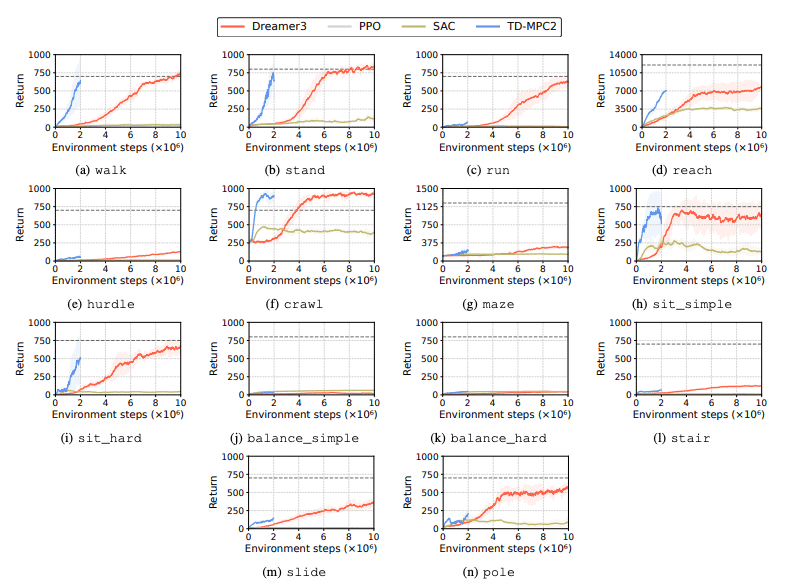

To identify the challenges humanoid robots face in learning tasks, the authors evaluated four widely used RL algorithms: DreamerV3, TD-MPC2, SAC, and PPO. The results showed that these baseline algorithms fell short of the success threshold on many tasks.
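The comparison against a success threshold can be expressed as a small evaluation helper. This is a hypothetical sketch, not the paper's evaluation code: it assumes each task has a single scalar threshold and that a task counts as solved when the mean episode return reaches it; `solved_tasks` and `success_fraction` are illustrative names.

```python
from statistics import mean
from typing import Dict, List


def solved_tasks(returns: Dict[str, List[float]],
                 thresholds: Dict[str, float]) -> Dict[str, bool]:
    """Mark a task as solved if its mean episode return reaches its threshold."""
    return {task: mean(episode_returns) >= thresholds[task]
            for task, episode_returns in returns.items()}


def success_fraction(returns: Dict[str, List[float]],
                     thresholds: Dict[str, float]) -> float:
    """Fraction of evaluated tasks whose threshold was reached."""
    solved = solved_tasks(returns, thresholds)
    return sum(solved.values()) / len(solved)
```

Averaging over several evaluation episodes before comparing with the threshold keeps a single lucky episode from marking a task as solved.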

In particular, current RL algorithms struggle with high-dimensional action spaces and complex tasks, especially tasks that require dexterous hand use and complex whole-body coordination. Manipulation tasks are particularly challenging, and the algorithms often obtain low rewards on them.

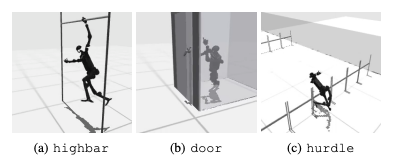

A common failure mode is that the algorithms fail to learn the intended robot behavior in tasks such as high hurdles, gates, and obstacles, because it is difficult to find a policy that produces such complex behaviors.

To address these challenges, a hierarchical RL approach is considered: training low-level skills and combining them through a high-level planning policy can make tasks easier to solve. However, current algorithms still leave room for improvement.
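The hierarchical idea can be sketched as a two-level controller: every `period` steps a high-level policy picks which low-level skill to run and what goal to give it, and the selected skill produces the actual commands in between. `HierarchicalController`, the `(skill_index, goal)` interface, the toy `reach_skill`, and the re-planning period are assumptions made for illustration, not the authors' architecture; in an RL setting the skills would be pretrained policies and the high-level policy would be trained on the task reward.

```python
from typing import Callable, List, Sequence, Tuple

Obs = Sequence[float]
Goal = Sequence[float]
Skill = Callable[[Obs, Goal], List[float]]      # low-level: (obs, goal) -> action
HighLevel = Callable[[Obs], Tuple[int, Goal]]   # high-level: obs -> (skill, goal)


class HierarchicalController:
    """Hypothetical two-level controller built from pretrained skills."""

    def __init__(self, high_level: HighLevel, skills: List[Skill], period: int = 10):
        self.high_level = high_level
        self.skills = skills
        self.period = period      # low-level steps between high-level decisions
        self.t = 0
        self.current: Tuple[int, Goal] = (0, ())

    def act(self, obs: Obs) -> List[float]:
        if self.t % self.period == 0:               # time to re-plan
            self.current = self.high_level(obs)
        self.t += 1
        skill_index, goal = self.current
        return self.skills[skill_index](obs, goal)  # the chosen skill acts


def reach_skill(obs: Obs, goal: Goal) -> List[float]:
    """Toy 'reaching' skill: command a step proportional to the goal error."""
    return [0.5 * (g - o) for g, o in zip(goal, obs)]


controller = HierarchicalController(lambda obs: (0, [1.0, 1.0]), [reach_skill], period=5)
print(controller.act([0.0, 0.5]))  # [0.5, 0.25]
```

Holding the high-level choice fixed for several steps shortens the horizon the high-level policy must reason over, which is one motivation for building on robust low-level skills.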

The study introduced HumanoidBench, a benchmark for high-dimensional humanoid robot control. It provides a comprehensive humanoid environment with a variety of locomotion and manipulation tasks, ranging from toy problems to practical applications. The authors hope that it will encourage researchers to tackle such complex tasks and promote the development of whole-body control algorithms for humanoid robots.

In future research, it will be important to study the interaction between different sensing modalities. Other directions include incorporating more realistic objects and environments with real-world diversity and high-quality rendering, and exploring other ways to guide learning in settings where physical demonstrations are difficult to collect.